Food Computation

Contents

- 1 Motivation and Background

- 2 Projects

- 2.1 1. Explainable Recommendation: A neuro-symbolic food recommendation system with deep learning models and knowledge graphs

- 2.2 2. Ingredient Substitution: Identifying suitable ingredients for health condition and food preferences

- 2.3 3. mDiabetes: A mHealth application to monitor and track carbohydrate intake

- 2.4 4. Ingredient Segmentation: Using generative models for dataset creation and ingredient segmentation

- 2.5 5. Representation Learning: Cross-modal representation learning to retrieve cooking procedure from food images

- 3 Team Members

- 4 Publications

Motivation and Background

In recent years, people have become more aware of their food choices because of the impact of diet on health and chronic disease. Consequently, the use of dietary assessment systems has increased; most of these systems estimate calorie content from food images. Several such systems have shown promising results in nudging users toward healthier eating habits, which has spurred a wide range of research in food computation. AIISC is currently carrying out the following projects in this area.

Projects

1. Explainable Recommendation: A neuro-symbolic food recommendation system with deep learning models and knowledge graphs

In this work, we propose a food recommendation system (Figure-1) that employs an analyser, which determines whether a food is advisable for the user, and a reasoner, which explains the decisions made by the analyser. The system harnesses the generalization power of deep learning models and the abstraction power of knowledge graphs to analyze recipes. The knowledge graphs involved in this work are:

- Personalized health knowledge graph

- Disease-specific knowledge graph

- Nutrition retention knowledge graph

- Cooking effects knowledge graph

Given a food image, the system retrieves the cooking instructions and extracts the cooking actions [2]. The ingredients and cooking actions are then analyzed against the knowledge graphs, and inferences are drawn with respect to the individual's health conditions and food preferences. We plan to extend the recommendation system to suggest alternative ingredients and cooking actions.
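To make the analyser/reasoner split concrete, the following is a minimal sketch, assuming the knowledge graphs are flattened into (subject, relation, object) triples. The deep learning analyser is replaced here by a plain rule lookup, and every entity, relation, and function name (`rich_in`, `increases`, `should_limit`, `find_conflicts`, `explain`, `recommend`) is a hypothetical placeholder rather than part of the actual system.

```python
# Hypothetical toy knowledge graph of (subject, relation, object) triples.
# Every entity name here is a placeholder, not a real nutrition or medical fact.
KG = {
    ("ingredient_a", "rich_in", "nutrient_x"),
    ("ingredient_b", "rich_in", "nutrient_z"),
    ("deep_frying", "increases", "nutrient_y"),
    ("condition_1", "should_limit", "nutrient_x"),
    ("condition_1", "should_limit", "nutrient_y"),
}


def objects(subject: str, relation: str) -> set:
    """Return every object linked to `subject` by `relation` in the toy KG."""
    return {o for (s, r, o) in KG if s == subject and r == relation}


def find_conflicts(ingredients, actions, conditions):
    """Analyser stand-in: collect (item, relation, nutrient) facts that clash
    with a nutrient the user's health conditions say to limit."""
    limited = set()
    for condition in conditions:
        limited |= objects(condition, "should_limit")
    conflicts = []
    for ingredient in ingredients:
        for nutrient in sorted(objects(ingredient, "rich_in") & limited):
            conflicts.append((ingredient, "rich_in", nutrient))
    for action in actions:
        for nutrient in sorted(objects(action, "increases") & limited):
            conflicts.append((action, "increases", nutrient))
    return conflicts


def explain(conflicts):
    """Reasoner stand-in: turn each conflicting fact into a readable sentence."""
    templates = {
        "rich_in": "{item} is rich in {nutrient}, which should be limited.",
        "increases": "{item} increases {nutrient}, which should be limited.",
    }
    return [templates[rel].format(item=item, nutrient=nutrient)
            for item, rel, nutrient in conflicts]


def recommend(ingredients, actions, conditions):
    """Return (advisable, explanations) for a recipe and a user's conditions."""
    conflicts = find_conflicts(ingredients, actions, conditions)
    return len(conflicts) == 0, explain(conflicts)


advisable, reasons = recommend(["ingredient_a", "ingredient_b"], ["deep_frying"], ["condition_1"])
print(advisable, reasons)
```

Keeping the decision (`find_conflicts`) separate from the explanation (`explain`) means the reasoner only ever verbalizes facts the analyser actually used, which is one simple way to keep explanations faithful to decisions.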

2. Ingredient Substitution: Identifying suitable ingredients for health condition and food preferences

In this project, we propose to build an ingredient substitution knowledge graph to identify suitable substitutes in cases of allergens, health conditions, food preferences, and missing ingredients. This project is currently in its data collection phase.
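Since the graph is still being built, the following is only a minimal sketch of how a substitution query might work, assuming each ingredient links to candidate substitutes and each substitute carries tags such as allergens or dietary labels. The schema, the tag names, and `suggest_substitutes` are all hypothetical.

```python
# Hypothetical substitution graph: ingredient -> ordered candidate substitutes.
# All names and tags are illustrative placeholders, not collected data.
SUBSTITUTES = {
    "ingredient_a": ["substitute_1", "substitute_2", "substitute_3"],
}

# Hypothetical tags attached to each substitute node (allergens, diet labels).
TAGS = {
    "substitute_1": {"allergen:nuts"},
    "substitute_2": {"vegan"},
    "substitute_3": {"vegan", "allergen:soy"},
}


def suggest_substitutes(ingredient, avoid_tags=frozenset(), require_tags=frozenset()):
    """Return substitutes carrying none of `avoid_tags` and all of `require_tags`.

    A missing ingredient is simply the case with no constraints; allergens and
    health conditions map to `avoid_tags`, preferences to `require_tags`.
    """
    results = []
    for candidate in SUBSTITUTES.get(ingredient, []):
        tags = TAGS.get(candidate, set())
        if tags & avoid_tags:           # candidate contains something to avoid
            continue
        if not require_tags <= tags:    # candidate lacks a required property
            continue
        results.append(candidate)
    return results


print(suggest_substitutes("ingredient_a", avoid_tags={"allergen:soy"}, require_tags={"vegan"}))
```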

3. mDiabetes: A mHealth application to monitor and track carbohydrate intake

In this work, we developed a mobile health application to track the carbohydrate intake of patients with type 1 diabetes. The user enters the name of a food item and its quantity in a convenient unit. The app queries a nutrition database and converts the user-entered quantity into an estimate of the carbohydrate content.
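The quantity-to-carbohydrate conversion can be sketched as below. This is a minimal illustration, assuming the database returns carbohydrates per 100 g and a density in g/mL for each food; the table values and `estimate_carbs` are hypothetical placeholders, not the app's code or real nutrition data. The household measures follow the common nutrition-labeling conventions (1 cup = 240 mL, 1 tbsp = 15 mL, 1 tsp = 5 mL).

```python
# Placeholder nutrition table. Values are illustrative only, NOT real nutrition
# data; the app itself would query a nutrition database instead.
NUTRITION = {
    # food name: (carbohydrate grams per 100 g, density in g/mL)
    "food_a": (20.0, 0.5),
}

# Volume units map to millilitres, mass units to grams (assumed conventions).
VOLUME_ML = {"ml": 1.0, "tsp": 5.0, "tbsp": 15.0, "cup": 240.0}
MASS_G = {"g": 1.0, "oz": 28.3495}


def estimate_carbs(food, quantity, unit):
    """Estimate carbohydrate grams for `quantity` of `food` measured in `unit`."""
    carbs_per_100g, density_g_per_ml = NUTRITION[food]
    if unit in MASS_G:
        grams = quantity * MASS_G[unit]
    elif unit in VOLUME_ML:
        # Volume is converted to mass through the food's density.
        grams = quantity * VOLUME_ML[unit] * density_g_per_ml
    else:
        raise ValueError(f"unsupported unit: {unit}")
    return grams * carbs_per_100g / 100.0


print(estimate_carbs("food_a", 1, "cup"))  # 240 mL * 0.5 g/mL = 120 g -> 24.0 g carbs
```

The density step is what makes volume-based entry (and, later, image-based volume estimates) usable, since nutrition databases typically report nutrients per unit mass.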


The app is being extended to support image-based carbohydrate estimation, which requires estimating food volume from images.

4. Ingredient Segmentation: Using generative models for dataset creation and ingredient segmentation

To perform image-based volume estimation, the food image must be segmented and its ingredients identified. We propose to train an ensemble of (i) an object detection model that segments visible ingredients and (ii) a vision transformer model that detects invisible ingredients. However, the lack of food segmentation datasets poses a challenge. To overcome this, we propose a novel pipeline that uses generative models to create a labeled food segmentation dataset, which we then use to train the ingredient segmentation model. Samples from the dataset generated using Diffusion Attentive Attribution Maps (DAAM) are presented in Figure-2.

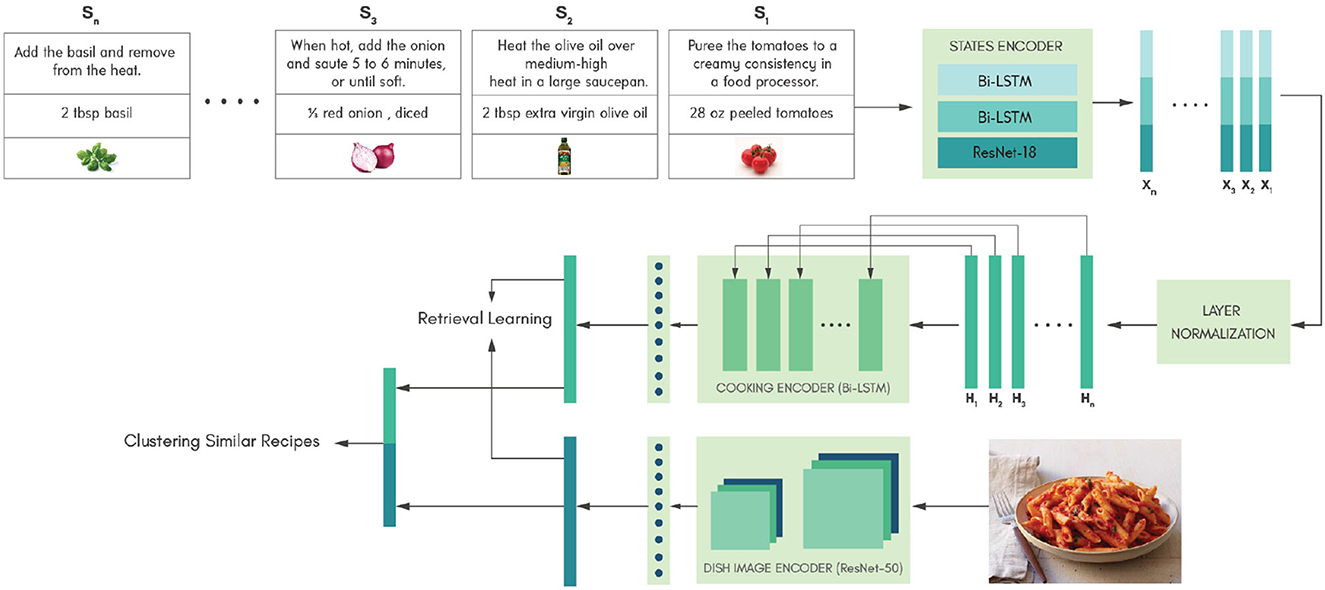
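DAAM produces one attribution heat map per prompt word by aggregating a diffusion model's cross-attention. One plausible way to turn such maps into segmentation labels is sketched below, assuming one heat map per ingredient word, already resized to the image resolution. The min-max normalization, the 0.4 threshold, and `heatmaps_to_mask` are illustrative assumptions, not the project's pipeline settings.

```python
import numpy as np


def heatmaps_to_mask(heatmaps, threshold=0.4):
    """Convert per-ingredient attribution heat maps into a single label mask.

    heatmaps: dict mapping ingredient name -> 2-D float array of shape (H, W).
    Returns (mask, labels): mask[h, w] is 0 for background, or i + 1 when the
    pixel is assigned to labels[i].
    """
    labels = list(heatmaps)
    stack = np.stack([np.asarray(heatmaps[name], dtype=float) for name in labels])  # (K, H, W)
    # Min-max normalise each map to [0, 1] so a single threshold applies to all.
    mins = stack.min(axis=(1, 2), keepdims=True)
    maxs = stack.max(axis=(1, 2), keepdims=True)
    norm = (stack - mins) / np.maximum(maxs - mins, 1e-8)
    # Each pixel goes to the ingredient with the strongest attribution...
    best = norm.argmax(axis=0)
    # ...unless even the strongest attribution is below the threshold.
    mask = np.where(norm.max(axis=0) >= threshold, best + 1, 0)
    return mask, labels


maps = {
    "rice": np.array([[1.0, 0.0], [0.0, 0.0]]),
    "beans": np.array([[0.0, 0.0], [0.0, 1.0]]),
}
mask, labels = heatmaps_to_mask(maps)
print(labels, mask.tolist())  # ['rice', 'beans'] [[1, 0], [0, 2]]
```

Because the generated image and its prompt come together, the ingredient names in the prompt double as the mask labels, which is what lets a generative pipeline produce labeled data without manual annotation.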

5. Representation Learning: Cross-modal representation learning to retrieve cooking procedure from food images

To support the explainable recommendation system (Project-1) in retrieving cooking instructions from food images, we proposed the cross-modal retrieval system described in Figure 4. In this work, we leverage knowledge-infused clustering approaches to cluster similar recipes in the latent space [1]. Clustering similar recipes enables the retrieval of more accurate cooking procedures for a given food image. We are currently enhancing and testing this network with transformer architectures.

Architecture of Ki-Cook
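The retrieval step can be sketched as follows, assuming image and recipe encoders have already been trained to map into a shared latent space and each recipe already has a cluster assignment. The cluster-then-rank strategy with cosine similarity and the function `retrieve` are illustrative assumptions; they do not reproduce Ki-Cook's knowledge-infused clustering, which shapes the latent space during training.

```python
import numpy as np


def normalize(x):
    """L2-normalise vectors along the last axis."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def retrieve(image_emb, recipe_embs, recipe_clusters, top_k=3):
    """Return indices of the top_k recipes most similar to a food image.

    The search is restricted to the recipe cluster whose centroid is closest
    to the image embedding; recipes in that cluster are then ranked by
    cosine similarity.
    """
    image_emb = normalize(np.asarray(image_emb, dtype=float))
    recipe_embs = normalize(np.asarray(recipe_embs, dtype=float))
    recipe_clusters = np.asarray(recipe_clusters)
    cluster_ids = np.unique(recipe_clusters)
    centroids = normalize(np.stack(
        [recipe_embs[recipe_clusters == c].mean(axis=0) for c in cluster_ids]))
    best_cluster = cluster_ids[np.argmax(centroids @ image_emb)]
    candidates = np.flatnonzero(recipe_clusters == best_cluster)
    scores = recipe_embs[candidates] @ image_emb
    order = np.argsort(-scores)[:top_k]
    return candidates[order].tolist()


recipes = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
clusters = [0, 0, 1, 1]
print(retrieve([1.0, 0.0], recipes, clusters))  # [0, 1]
```

Tighter clusters of similar recipes make the first (cluster-selection) step more reliable, which is one way to see why clustering in the latent space can improve the cooking procedures retrieved for an image.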

Team Members

Coordinated by - Revathy Venkataramanan

Advised by - Amit Sheth

AIISC Collaborators:

- Kaushik Roy

- Yuxin Zi

- Vedant Khandelwal

- Renjith Prasad

- Hynwood Kim

- Jinendra Malekar

External Collaborators

Students/Interns

- Kanak Raj (BIT Mesra)

- Ishan Rai (Amazon)

- Jayati Srivastava (Google)

- Dhruv Makwana (Ignitarium)

- Deeptansh (IIIT Hyderabad)

- Akshit (IIIT Hyderabad)

Professors/Leads

- Dr. Lisa Knight (Prisma Health - Endocrinologist)

- Dr. James Herbert (USC - Epidemiologist and Nutritionist)

- Dr. Ponnurangam Kumaraguru (Professor - IIIT Hyderabad)

- Victor Penev (Edamam - Industry collaborator)

Publications

- Venkataramanan, Revathy, Swati Padhee, Saini Rohan Rao, Ronak Kaoshik, Anirudh Sundara Rajan, and Amit Sheth. "Ki-Cook: Clustering Multimodal Cooking Representations through Knowledge-infused Learning." Frontiers in Big Data 6 (2023): 1200840.

- Venkataramanan, Revathy, Kaushik Roy, Kanak Raj, Renjith Prasad, Yuxin Zi, Vignesh Narayanan, and Amit Sheth. "Cook-Gen: Robust Generative Modeling of Cooking Actions from Recipes." arXiv preprint arXiv:2306.01805 (2023).

- Sheth, Amit, Manas Gaur, Kaushik Roy, Revathy Venkataramanan, and Vedant Khandelwal. "Process Knowledge-Infused AI: Toward User-Level Explainability, Interpretability, and Safety." IEEE Internet Computing 26, no. 5 (2022): 76-84.