RT Events On LOD


Real Time Social Events on LOD

Introduction

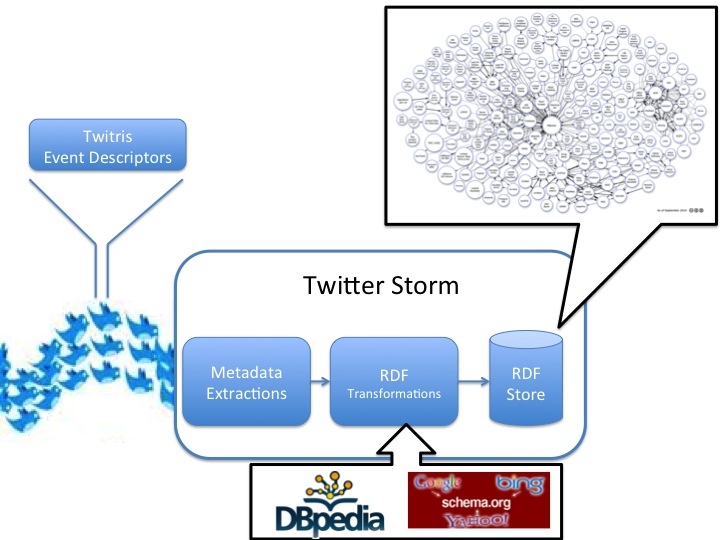

Linked Open Data (LOD) describes a method of publishing structured data so that it can be interlinked and become more useful (Wikipedia). Transforming unstructured social data (tweets) into structured data and publishing it as LOD enriches its value. In this project, we published social data related to ongoing events as LOD in real time. We also developed a visualization tool for event-centric social data that displays trending entities along with their relations from DBpedia (see Graph Visualization).

In this project, we extended Twarql to collect most of the metadata that Twitter provides, and we extracted additional information through analysis in order to transform each unstructured tweet into a structured form.

Architecture

The architecture extends that of Twarql to include extraction of metadata from each tweet using Twitter Storm. Once the metadata is extracted, the tweet is transformed to RDF using a lightweight vocabulary (an extension of the vocabulary used by SMOB). We use DBpedia for background knowledge.
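The metadata-extraction step can be sketched as follows. Because the Twitter API already delivers hashtags, user mentions, and URLs as structured `entities`, these can be read directly rather than re-detected. This is a minimal sketch assuming the v1.1 Streaming API JSON layout; `extract_metadata` is a hypothetical helper, the selection of fields is illustrative rather than the exact set Twarql stored, and the Storm topology that would run it is not shown.

```python
import json
from typing import Optional


def extract_metadata(raw: str) -> Optional[dict]:
    """Pull selected fields out of one Streaming API (v1.1-style) tweet.

    Returns None for stream messages that are not tweets
    (e.g. deletion or limit notices), which carry no "id_str".
    """
    tweet = json.loads(raw)
    if "id_str" not in tweet:
        return None
    entities = tweet.get("entities", {})
    return {
        "id": tweet["id_str"],
        "text": tweet.get("text", ""),
        "created_at": tweet.get("created_at"),
        "author": tweet.get("user", {}).get("screen_name"),
        # Entities detected by Twitter itself, so no extra NLP is needed for these.
        "hashtags": [h["text"] for h in entities.get("hashtags", [])],
        "mentions": [m["screen_name"] for m in entities.get("user_mentions", [])],
        # Prefer the expanded URL over the t.co short link when it is present.
        "urls": [u.get("expanded_url") or u.get("url") for u in entities.get("urls", [])],
        # "place" is null unless the tweet is geotagged; keep only its display name.
        "place": (tweet.get("place") or {}).get("full_name"),
    }
```

Each dictionary produced this way is what the later RDF-conversion step would consume.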

Metadata Extraction

Phases of Work

- Finding the difference between the metadata Twitter currently provides and the metadata Twarql uses

| Metadata provided by Twitter Streaming API | Metadata used by Twarql as of now |
|---|---|

Note: There is a gap between the metadata Twarql uses and the metadata the Twitter Streaming API provides. It is also more reliable to use the entities supplied by the Twitter API (such as hashtags, user mentions, and URLs) than to rely on our own extraction algorithms.

- Designing a schema for the remaining metadata (the fields Twarql does not yet use)

- Extracting entities from the tweets and finding the corresponding DBpedia URI for each one

- Converting the data into RDF triples

- Storing the triples in a triple store (Virtuoso)

- Publishing them on the web, for example at http://twarql.org/resource/page/post/126824738495545344

- Querying them through the SPARQL endpoint at http://twarql.org:8890/sparql
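The conversion and storage steps above can be sketched as a function that emits N-Triples, a line-based RDF syntax that triple stores such as Virtuoso can load. This is a minimal sketch under stated assumptions: the `moat:taggedWith` and sentiment properties are taken from the SPARQL queries on this page, the SIOC terms stand in for the SMOB-style vocabulary, and the post base URI and the naive `dbpedia_uri` label-to-URI mapping (no disambiguation) are hypothetical, not the project's actual code.

```python
from urllib.parse import quote

# Hypothetical base URI for tweet resources; the real Twarql pattern may differ.
POST_BASE = "http://twarql.org/resource/post/"
DBPEDIA_RESOURCE = "http://dbpedia.org/resource/"

# Properties as used in the SPARQL queries on this page.
TAGGED_WITH = "http://moat-project.org/ns#taggedWith"
SENTIMENT = "http://twarql.org/resource/property/sentiment"
SENTIMENT_BASE = "http://twarql.org/resource/property/"

# SIOC terms, assumed here as the SMOB-style base vocabulary.
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
MICROBLOG_POST = "http://rdfs.org/sioc/types#MicroblogPost"
SIOC_CONTENT = "http://rdfs.org/sioc/ns#content"


def dbpedia_uri(label: str) -> str:
    """Naively map an entity label to a DBpedia resource URI (no disambiguation)."""
    name = label.strip().replace(" ", "_")
    name = name[:1].upper() + name[1:]  # DBpedia names start with a capital letter
    return DBPEDIA_RESOURCE + quote(name, safe="(),'")


def escape_literal(text: str) -> str:
    """Escape a string for use inside an N-Triples literal."""
    return (text.replace("\\", "\\\\").replace('"', '\\"')
                .replace("\n", "\\n").replace("\r", "\\r"))


def tweet_to_ntriples(tweet_id: str, text: str, sentiment: str,
                      entities: list) -> list:
    """Return one N-Triples line per statement about the tweet."""
    s = f"<{POST_BASE}{tweet_id}>"
    lines = [
        f"{s} <{RDF_TYPE}> <{MICROBLOG_POST}> .",
        f'{s} <{SIOC_CONTENT}> "{escape_literal(text)}" .',
        f"{s} <{SENTIMENT}> <{SENTIMENT_BASE}{sentiment}> .",
    ]
    for label in entities:
        lines.append(f"{s} <{TAGGED_WITH}> <{dbpedia_uri(label)}> .")
    return lines


if __name__ == "__main__":
    # Illustrative input only.
    for line in tweet_to_ntriples("126824738495545344", 'An "example" tweet',
                                  "Positive", ["Anna Hazare"]):
        print(line)
```

Writing plain N-Triples keeps the converter free of RDF library dependencies; a real pipeline would replace `dbpedia_uri` with proper entity spotting and disambiguation.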

What I Learned

- What Linked Open Data (LOD) is.

- What DBpedia is.

- Different ontologies.

- What RDF is, how to create a triple, and how to store triples in a triple store.

- What the SPARQL query language is and how to write SPARQL queries.

Graph Visualization

Work that has been done:

- Find a good triple store visualization library: I searched the internet extensively for well-written, clean, and good-looking graph visualization libraries that could display entities with names, draw lines representing the relationships between those entities, and vary the size of each entity based on its frequency. The best one I found is the JavaScript InfoVis Toolkit [1], a well-rounded visualization library with many options for all kinds of graphs. The graph style that best fits this project is the force-directed graph. I partially implemented the project, stubbing out the pull method that gets the data from the RDF database. The graph takes its data as JSON and uses jQuery to display it.

- Put together a demo HTML page that links the jQuery library, the "pull.php" file, and the graph visualization library.

- After we showed the demo to Pavan, we decided the graph looked too plain, so we added more styling to the test page, not only in the CSS but also in the JavaScript and jQuery graph code. For instance, the lines between entities were made thicker, a loading bar was added to show the graph loading, and the code was changed so that entities appear closer together on the graph.

- Implement a function that gets JSON from pull.php and converts it into JavaScript objects so that graph.js has data to plot. I used this online tutorial [2] to learn how to pass JSON from PHP to JavaScript.

- Implement a pull.php file that sends queries directly to DBpedia and retrieves JSON using GET and POST. I modified and extended code from this blog [3] to do this.

- The pull.php file also converts the JSON returned for DBpedia ontology terms into a format the graph understands, with fields such as "entity" and "relationship".
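The conversion that pull.php performs can be sketched as follows, written in Python for illustration rather than the project's PHP. It assumes the JavaScript InfoVis Toolkit's force-directed graph JSON layout (a list of nodes with `id`, `name`, `data`, and `adjacencies`, where `$dim` overrides node size when node properties are overridable). The square-root size scaling, the `relationship` edge key, and the function names are hypothetical choices, not the project's actual code.

```python
import math


def local_name(uri: str) -> str:
    """Human-readable name from a DBpedia-style URI."""
    return uri.rstrip("/").rsplit("/", 1)[-1].replace("_", " ")


def to_jit_graph(counts: dict, relations: list,
                 min_dim: float = 5.0, max_dim: float = 25.0) -> list:
    """Build a node list in the InfoVis Toolkit force-directed JSON shape.

    counts: entity URI -> number of tweets mentioning it (drives node size).
    relations: (subject URI, relationship URI, object URI) tuples drawn as edges.
    """
    top = max(counts.values(), default=0) or 1  # avoid dividing by zero
    nodes = {}
    for uri, n in counts.items():
        # Scale size with the square root of frequency so one entity doesn't dominate.
        dim = min_dim + (max_dim - min_dim) * math.sqrt(n / top)
        nodes[uri] = {"id": uri, "name": local_name(uri),
                      "data": {"$dim": round(dim, 1), "count": n},
                      "adjacencies": []}
    for subj, rel, obj in relations:
        if subj in nodes and obj in nodes:  # only connect entities being drawn
            nodes[subj]["adjacencies"].append(
                {"nodeTo": obj, "data": {"relationship": local_name(rel)}})
    return list(nodes.values())
```

Serializing the result with `json.dumps` gives a structure the page's JavaScript can hand to the force-directed graph; the most frequent entity gets `max_dim` and the others shrink toward `min_dim`.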

What I Learned

- How to write and edit JavaScript, and how to include external JavaScript files in a website.

- How to write and modify jQuery, and how to include it in a web project.

- What JSON is, how it works, and how it is passed between languages such as JavaScript and PHP.

- Gained experience with RDF, SPARQL, triple-based databases, and databases in general, including how to query them.

- How arrays work in PHP and how they can be converted to and from JSON.

- How to work, communicate, and collaborate with a team of developers to complete a project.

Use Case

One use case for this application is real-time querying of the semantic social stream.

Use Case -- TWITRIS/IAC (INDIA AGAINST CORRUPTION)

Data that we worked on

We worked on the tweets collected for India Against Corruption, drawn from the Twitris database.

Here are some statistics:

- Total number of tweets (microposts) -- 116001

- Number of entities in tweets -- 85834

Data that we created

- Number of tweets that have at least one entity -- 64691

- Number of tweets that have more than one entity -- 21143

- Number of persons mentioned in all these tweets (of the total 16197) -- 363

- Number of places mentioned in all these tweets (of the total 6030) -- 479

The Big Thing

- We created about 1,262,627 (1.26 million) triples.

Published Data

All of the data is published on the web, for example at http://twarql.org/resource/page/post/126824738495545344

SOME SPARQL QUERIES

SPARQL queries we have used.

- Most talked-about places

```sparql
SELECT ?place (COUNT(?place) AS ?placecount) WHERE {
  ?tweet <http://moat-project.org/ns#taggedWith> ?place .
  ?place a <http://dbpedia.org/ontology/Place> .
}
GROUP BY ?place
ORDER BY DESC(?placecount)
```

- Politicians mentioned in tweets with positive sentiment

```sparql
SELECT ?person (COUNT(?person) AS ?personcount) WHERE {
  ?tweet <http://moat-project.org/ns#taggedWith> ?person .
  ?person a <http://dbpedia.org/ontology/Person> .
  ?person <http://dbpedia.org/property/profession> <http://dbpedia.org/resource/Politician> .
  ?tweet <http://twarql.org/resource/property/sentiment> <http://twarql.org/resource/property/Positive> .
}
GROUP BY ?person
ORDER BY DESC(?personcount)
```

- People mentioned in this event who are both politicians and engineers

```sparql
SELECT ?person (COUNT(?person) AS ?personcount) WHERE {
  ?tweet <http://moat-project.org/ns#taggedWith> ?person .
  ?person a <http://dbpedia.org/ontology/Person> .
  ?person <http://dbpedia.org/property/profession> <http://dbpedia.org/resource/Politician> .
  ?person <http://dbpedia.org/property/profession> <http://dbpedia.org/resource/Engineer> .
}
GROUP BY ?person
ORDER BY DESC(?personcount)
```

- Each person mentioned and their profession

```sparql
SELECT DISTINCT ?person ?profession WHERE {
  ?tweet <http://moat-project.org/ns#taggedWith> ?person .
  ?person a <http://dbpedia.org/ontology/Person> .
  ?person <http://dbpedia.org/property/profession> ?profession .
}
```

- Politicians spoken about in a Place

```sparql
SELECT ?place ?person (COUNT(?person) AS ?personcount) WHERE {
  ?tweet <http://moat-project.org/ns#taggedWith> ?place .
  ?tweet <http://moat-project.org/ns#taggedWith> ?person .
  ?place a <http://dbpedia.org/ontology/Place> .
  ?person a <http://dbpedia.org/ontology/Person> .
  ?person <http://dbpedia.org/property/profession> <http://dbpedia.org/resource/Politician> .
}
GROUP BY ?place ?person
ORDER BY DESC(?personcount)
```
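The queries above can also be sent to the endpoint programmatically. This is a minimal sketch, assuming the endpoint follows the SPARQL 1.1 Protocol (the query passed as a `query` GET parameter, results requested as `application/sparql-results+json`); the helper names are hypothetical, and the endpoint URL, taken from this page, may no longer be online.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Endpoint listed on this page; it may no longer be reachable.
ENDPOINT = "http://twarql.org:8890/sparql"


def build_request(query: str, endpoint: str = ENDPOINT) -> Request:
    """Build a SPARQL 1.1 Protocol GET request that asks for JSON results."""
    url = endpoint + "?" + urlencode({"query": query})
    return Request(url, headers={"Accept": "application/sparql-results+json"})


def parse_bindings(results: dict) -> list:
    """Flatten SPARQL JSON results into one {variable: value} dict per row."""
    return [{var: cell["value"] for var, cell in binding.items()}
            for binding in results["results"]["bindings"]]


def run_query(query: str, endpoint: str = ENDPOINT, timeout: float = 30.0) -> list:
    """Send the query and return the flattened rows (needs network access)."""
    with urlopen(build_request(query, endpoint), timeout=timeout) as resp:
        return parse_bindings(json.load(resp))
```

Passing any of the queries above to `run_query` returns one dict per result row with every value as a string (counts included), so numeric columns need converting, e.g. `int(row["placecount"])`.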

PROJECT DEMO LINKS

- http://twitris.knoesis.org/iac (Search & Explore tab)

- Here we use SPARQL queries to fetch the desired information from the LOD we published (backend only).

- Graph Visualization: http://www.kiddiescissors.com/twitvis

References

- Milan Stankovic, Matthew Rowe, and Philippe Laublet -- Mapping Tweets to Conference Talks: A Goldmine for Semantics

- Pablo N. Mendes, Alexandre Passant, Pavan Kapanipathi, and Amit P. Sheth -- Linked Open Social Signals

- Pablo N. Mendes, Pavan Kapanipathi, and Alexandre Passant -- Twarql: Tapping into the Wisdom of the Crowd

- Amit Sheth, Hemant Purohit, Ashutosh Jadhav, Pavan Kapanipathi, and Lu Chen -- Understanding Events Through Analysis of Social Media

Work Schedule

Pramod

- Difference between the metadata being collected now and what you have found

- Schema for the tweets

- Event-based schema for the tweets

- Code to count the most frequent DBpedia entities

- Real-time updating of the counts stored as RDF in the triple store

Dylan

- Find a visualization library

- Integrate it

- Modify it to the project's specific needs

- Implement pull function to fill it with data

Presentation

Team

- Pavan Kapanipathi -- pavan@knoesis.org

- Pramod Koneru -- koneru@knoesis.org

- Dylan Williams -- dylan@kiddiescissors.com