Property Alignment on Linked Datasets

Property alignment in Linked Open Data (LOD), or in linked datasets in general, is a non-trivial task because of the complex data representations involved. Concept (class) and instance level alignment have been investigated in the recent past, but property alignment has not yet received much attention. We therefore propose an approach that can handle complex data representations and also achieve a higher ratio of correct matches. Our approach builds on a fundamental building block of interlinked datasets such as LOD: Entity Co-Reference (ECR) links. We match property extensions to derive a measure that approximates owl:equivalentProperty. We use ECR links to find equivalent instances for a particular property extension, and then accumulate the number of matching extensions to decide on a matching property pair between two datasets.

Approach

In this initial experiment, we explored property extension matching using the owl:sameAs and skos:exactMatch interlinking relationships as ECR links. Later, we will explore less restrictive links such as skos:closeMatch, as well as links such as rdfs:seeAlso that certain datasets use for their own requirements, and check the performance.
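As a minimal sketch of how ECR links might be collected before matching, the snippet below filters a list of triples by predicate and builds a lookup in both directions. The function name `build_ecr_lookup`, the tuple-based triple format and the example resource URIs are assumptions made for illustration only; they are not taken from our implementation. The two predicate URIs are the standard ones for owl:sameAs and skos:exactMatch.

```python
# Standard URIs of the two link types used as ECR links in this experiment.
OWL_SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"
SKOS_EXACT_MATCH = "http://www.w3.org/2004/02/skos/core#exactMatch"
ECR_PREDICATES = frozenset({OWL_SAME_AS, SKOS_EXACT_MATCH})


def build_ecr_lookup(triples, predicates=ECR_PREDICATES):
    """Map each resource to the set of resources it is co-referent with.

    `triples` is an iterable of (subject, predicate, object) string tuples
    (an assumed, simplified input format). Links are stored in both
    directions because both predicates are symmetric.
    """
    lookup = {}
    for subject, predicate, obj in triples:
        if predicate in predicates:
            lookup.setdefault(subject, set()).add(obj)
            lookup.setdefault(obj, set()).add(subject)
    return lookup


# Hypothetical example data; the resource URIs are made up.
example_triples = [
    ("http://example.org/a/Film1", OWL_SAME_AS, "http://example.org/b/film_1"),
    ("http://example.org/a/Actor7", SKOS_EXACT_MATCH, "http://example.org/b/actor_7"),
    ("http://example.org/a/Film1",
     "http://www.w3.org/2000/01/rdf-schema#seeAlso",
     "http://example.org/b/other"),
]
ecr_lookup = build_ecr_lookup(example_triples)
```

Under this sketch, trying a less restrictive link type would amount to passing a larger `predicates` set, for example one that also contains skos:closeMatch.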

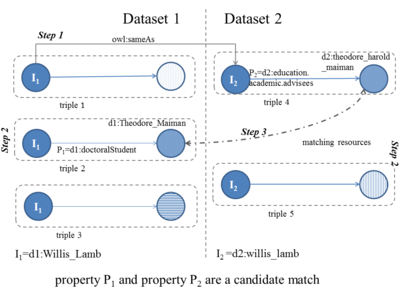

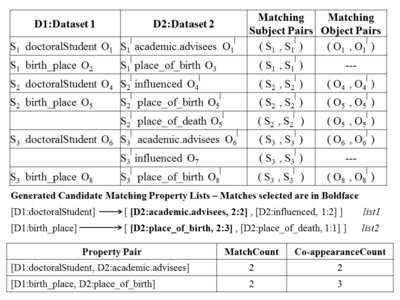

Figure 1 shows how the matching process works in our extension-based algorithm. Each property pair is matched independently of the others by analyzing each instance associated with that property (in the extension slots). The algorithm processes subject instances from the starting dataset: for each subject instance, it extracts the triples and finds the corresponding subject instance in the second dataset by traversing an ECR link. The object values for the property pair are then matched, again using ECR links. The final result of this matching process is illustrated by the example in Figure 2. To decide on the final matching pairs, we keep track of two statistical measures, MatchCount and Co-appearanceCount, which are shown in Figure 2 and described in the paper (to appear in I-SEMANTICS 2013). These measures help to reduce incorrect mappings such as "birth_place" and "place_of_birth".
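The matching process described above can be sketched roughly as follows. This is an illustrative simplification rather than our actual implementation: the in-memory dictionaries, the one-to-one `ecr` mapping, the function name `align_properties`, the exact way Co-appearanceCount is incremented, and the thresholds `min_ratio` and `min_matches` are all assumptions chosen for the example (the real decision rule is the one given in the paper). The identifiers in the toy data are made up.

```python
from collections import defaultdict


def align_properties(ds1, ds2, ecr, min_ratio=0.5, min_matches=2):
    """Return property pairs accepted as matches, with their counts.

    ds1, ds2: dict mapping a subject to a list of (property, object) pairs.
    ecr: dict mapping a resource in ds1 to its co-referent resource in ds2
         (assumed one-to-one for simplicity).
    min_ratio, min_matches: hypothetical thresholds, not the paper's values.
    """
    match_count = defaultdict(int)
    coappearance_count = defaultdict(int)

    for s1, pairs1 in ds1.items():
        s2 = ecr.get(s1)  # follow the ECR link to the second dataset
        if s2 is None or s2 not in ds2:
            continue

        # Group object values by property for both subjects.
        ext1, ext2 = defaultdict(set), defaultdict(set)
        for prop, obj in pairs1:
            ext1[prop].add(obj)
        for prop, obj in ds2[s2]:
            ext2[prop].add(obj)

        for p1, objs1 in ext1.items():
            # Translate objects through ECR links; literals and unlinked
            # resources are compared as they are.
            translated = {ecr.get(o, o) for o in objs1}
            for p2, objs2 in ext2.items():
                coappearance_count[(p1, p2)] += 1
                if translated & objs2:
                    match_count[(p1, p2)] += 1

    accepted = {}
    for pair, matches in match_count.items():
        ratio = matches / coappearance_count[pair]
        if matches >= min_matches and ratio >= min_ratio:
            accepted[pair] = (matches, coappearance_count[pair])
    return accepted


# Toy data with made-up identifiers.
ds1 = {
    "a:Person1": [("a:birthPlace", "a:City1"), ("a:spouse", "a:Person3")],
    "a:Person2": [("a:birthPlace", "a:City2"), ("a:deathPlace", "a:City4")],
}
ds2 = {
    "b:person1": [("b:place_of_birth", "b:city1"), ("b:spouse_s", "b:person3")],
    "b:person2": [("b:place_of_birth", "b:city2"), ("b:place_of_death", "b:city4")],
}
ecr = {
    "a:Person1": "b:person1", "a:Person2": "b:person2", "a:Person3": "b:person3",
    "a:City1": "b:city1", "a:City2": "b:city2", "a:City4": "b:city4",
}
result = align_properties(ds1, ds2, ecr)
```

In this toy run, only the pair `("a:birthPlace", "b:place_of_birth")` is accepted, because it matches for both linked subjects. The spouse and death-place pairs each match once, which is below the assumed `min_matches` threshold, and pairs such as `("a:birthPlace", "b:place_of_death")` co-appear but never match.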

Experiment and Datasets

To evaluate our approach on linked datasets, we used sample datasets from Linked Open Data (LOD): DBpedia, Freebase, LinkedMDB, DBLP L3S and DBLP RKB_Explorer. DBpedia and Freebase are major multi-domain hubs in LOD that connect other datasets together. LinkedMDB is a specialized dataset in the movie domain, and DBLP L3S and DBLP RKB_Explorer are specialized datasets for scientific publications. Our evaluation therefore covers three types of dataset alignment. The DBpedia and Freebase alignment represents multi-domain to multi-domain alignment, whereas the DBpedia and LinkedMDB alignment represents multi-domain to specific-domain alignment. The alignment of the two DBLP datasets represents specific-domain to specific-domain alignment.

For this experiment, we selected the person, film and software domains between the DBpedia and Freebase datasets because these domains have more complex data representations and variations. Films in LinkedMDB are aligned with films in DBpedia, and scientific articles in the two DBLP datasets are aligned with each other. In each of the five experiments, 5000 instances were analyzed to arrive at the alignment decisions.

The results are presented below in terms of precision, recall and F measure.

| Algorithm | Measure | DBpedia-Freebase (Person) | DBpedia-Freebase (Film) | DBpedia-Freebase (Software) | DBpedia-LinkedMDB (Film) | DBLP_RKB-DBLP_L3S (Articles) | Average |
|---|---|---|---|---|---|---|---|
| Our Algorithm | Precision | 0.8758 | 0.9737 | 0.6478 | 0.7560 | 1.0000 | 0.8427 |
| Our Algorithm | Recall | 0.8089 | 0.5138 | 0.4339 | 0.8157 | 1.0000 | 0.7145 |
| Our Algorithm | F measure | 0.8410 | 0.6727 | 0.5197 | 0.7848 | 1.0000 | 0.7656 |
| Dice Similarity | Precision | 0.8064 | 0.9666 | 0.7659 | 1.0000 | 0.0000 | 0.7078 |
| Dice Similarity | Recall | 0.4777 | 0.4027 | 0.3396 | 0.3421 | 0.0000 | 0.3124 |
| Dice Similarity | F measure | 0.6000 | 0.5686 | 0.4705 | 0.5098 | 0.0000 | 0.4298 |
| Jaro Similarity | Precision | 0.6774 | 0.8809 | 0.7755 | 0.9411 | 0.0000 | 0.6550 |
| Jaro Similarity | Recall | 0.5350 | 0.5138 | 0.3584 | 0.4210 | 0.0000 | 0.3625 |
| Jaro Similarity | F measure | 0.5978 | 0.6491 | 0.4903 | 0.5818 | 0.0000 | 0.4638 |
| WordNet Similarity | Precision | 0.5200 | 0.8620 | 0.7619 | 0.8823 | 1.0000 | 0.8052 |
| WordNet Similarity | Recall | 0.4140 | 0.3472 | 0.3018 | 0.3947 | 0.3333 | 0.3582 |
| WordNet Similarity | F measure | 0.4609 | 0.4950 | 0.4324 | 0.5454 | 0.5000 | 0.4867 |
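
The per-experiment F measure values in the table are consistent with the usual harmonic mean of precision and recall, F = 2PR / (P + R). The short sketch below shows that computation; the function name `f_measure` and the convention of returning 0 when both inputs are 0 are assumptions made for this illustration.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall of our algorithm on DBpedia-Freebase (Person).
person_f = f_measure(0.8758, 0.8089)  # approximately 0.8410, as in the table
```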