Entity Summary


Revision as of 16:54, 16 May 2014

Check back on Friday May 16th 2014 for more details...


Creating Faceted (Diversified) Entity Summaries

Creating entity summaries has been of growing interest in the Semantic Web community in the recent past. In our approach, called FACES (FACeted Entity Summaries), we aim to generate diversified and user-friendly summaries.

Preliminaries

Problem Statement - An entity is usually described by a conceptually diverse set of facts to improve coverage. We want to select a 'representative' subset of these facts that forms a good summary and uniquely identifies the entity.

Definitions 1-4 define the basic notions related to entity summaries and are taken from [1]. Throughout, $E$, $L$ and $P$ denote the sets of entities, literals and properties, respectively.

Definition 1: A data graph is a digraph $G = \langle V, A, Lbl_V, Lbl_A \rangle$, where (i) $V$ is a finite set of nodes, (ii) $A$ is a finite set of directed edges, where each $a \in A$ has a source node $Src(a) \in V$ and a target node $Tgt(a) \in V$, and (iii) $Lbl_V : V \to E \cup L$ and (iv) $Lbl_A : A \to P$ are labeling functions that map nodes to entities or literals and edges to properties.

Definition 2: A feature $f$ is a property-value pair, where $Prop(f) \in P$ and $Val(f) \in E \cup L$ denote the property and the value, respectively. An entity $e$ has a feature $f$ in a data graph $G = \langle V, A, Lbl_V, Lbl_A \rangle$ if there exists $a \in A$ such that $Lbl_A(a) = Prop(f)$, $Lbl_V(Src(a)) = e$ and $Lbl_V(Tgt(a)) = Val(f)$.

Definition 3: Given a data graph $G$, the feature set of an entity $e$, denoted by $FS(e)$, is the set of all features of $e$ that can be found in $G$.

Definition 4: Given $FS(e)$ and a positive integer $k < |FS(e)|$, the summary of entity $e$ is $Summ(e) \subset FS(e)$ such that $|Summ(e)| = k$.
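The definitions can be made concrete with a small sketch. For illustration only, it assumes the data graph is given as a list of label triples, one $(Lbl_V(Src(a)), Lbl_A(a), Lbl_V(Tgt(a)))$ triple per edge $a$. The example entity and property names and the helpers `feature_set` and `is_summary` are hypothetical.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Feature:
    """A feature f: a property-value pair (Definition 2)."""
    prop: str  # Prop(f)
    val: str   # Val(f)


# Hypothetical data graph: one (Lbl_V(Src(a)), Lbl_A(a), Lbl_V(Tgt(a))) triple per edge a.
EDGES = [
    ("dbr:Marie_Curie", "dbo:spouse", "dbr:Pierre_Curie"),
    ("dbr:Marie_Curie", "dbo:field", "dbr:Physics"),
    ("dbr:Marie_Curie", "dbo:field", "dbr:Chemistry"),
    ("dbr:Marie_Curie", "dbo:birthPlace", "dbr:Warsaw"),
    ("dbr:Pierre_Curie", "dbo:field", "dbr:Physics"),
]


def feature_set(entity, edges):
    """FS(e) (Definition 3): every feature carried by an edge whose source is e."""
    return {Feature(prop, val) for (src, prop, val) in edges if src == entity}


def is_summary(summ, fs, k):
    """Definition 4: Summ(e) is a subset of FS(e) with exactly k features, 0 < k < |FS(e)|."""
    return 0 < k < len(fs) and summ <= fs and len(summ) == k


fs = feature_set("dbr:Marie_Curie", EDGES)
print(len(fs))  # 4
# Every k-subset of FS(e) is a candidate summary: C(4, 2) = 6 of them for k = 2.
candidates = [set(c) for c in combinations(fs, 2)]
print(len(candidates))  # 6
```

Definition 4 admits every k-subset of $FS(e)$, so a summarizer has to choose one of $\binom{|FS(e)|}{k}$ candidates. The approaches compared below differ in how they make that choice.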

Clustering

The FACES approach generates faceted entity summaries that are both concise and comprehensive. Conciseness is about selecting a small number of facts. Comprehensiveness is about selecting facts that represent all aspects of an entity, which improves coverage. Diversity is about selecting facts that are orthogonal to each other, so that the few selected facts enrich coverage. Hence, diversity improves comprehensiveness when the number of features to include in a summary is limited. Conciseness can be achieved through various ranking and filtering techniques.

However, creating summaries that satisfy the conciseness and comprehensiveness constraints simultaneously is not trivial. It requires recognizing which facets of an entity its features represent, so that the summary can cover as many facets as possible (diversity and comprehensiveness) without redundancy (conciseness). The number and nature of the clusters (corresponding to abstract concepts) in an entity's feature set are not known a priori and are hard to guess without human intervention or explicit knowledge. Therefore, neither a supervised clustering algorithm nor an unsupervised clustering algorithm that requires a prescribed number of clusters can be used in this context. To achieve this objective, we adapted a flexible unsupervised clustering algorithm based on Cobweb [2][3] and designed a ranking algorithm for feature selection.
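The following sketch shows why recognizing facets helps when the budget k is small. It is a hypothetical selection step, not the FACES ranking algorithm. It assumes that features have already been grouped into facets and ranked within each facet, and that both steps are given. The selector takes the top-ranked feature of every facet before taking a second feature from any facet.

```python
def facet_round_robin(ranked_facets, k):
    """Hypothetical diversity-aware selection (not the FACES ranking algorithm).

    ranked_facets: list of facets, each a list of features ordered best-first.
    Returns up to k features, taking at most one feature per facet per round, so
    no facet contributes a second feature while another facet is still uncovered.
    """
    summary = []
    depth = 0  # position within each facet that the current round draws from
    while len(summary) < k:
        added = False
        for facet in ranked_facets:
            if len(summary) == k:
                break
            if depth < len(facet):
                summary.append(facet[depth])
                added = True
        if not added:  # every facet is exhausted
            break
        depth += 1
    return summary


facets = [
    ["field:Physics", "field:Chemistry"],        # e.g. professional life
    ["spouse:Pierre_Curie"],                     # e.g. social life
    ["birthPlace:Warsaw", "nationality:Polish"], # e.g. origin
]
print(facet_round_robin(facets, 2))  # ['field:Physics', 'spouse:Pierre_Curie']
print(facet_round_robin(facets, 4))  # ['field:Physics', 'spouse:Pierre_Curie', 'birthPlace:Warsaw', 'field:Chemistry']
```

With k smaller than the number of facets, each facet contributes at most one feature. With a larger k, every facet is covered before any facet repeats, which is the trade-off between comprehensiveness and conciseness described above.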

Hierarchical conceptual clustering

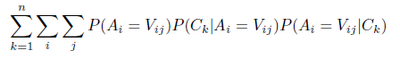

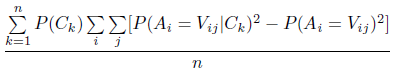

We use the Cobweb algorithm [2] for hierarchical conceptual clustering. As described in [2], "Cobweb is an incremental system for hierarchical clustering. The system carries out a hill-climbing search through a space of hierarchical classification schemes using operators that enable bidirectional travel through this space." Cobweb uses a heuristic measure called category utility (CU) to guide its search. Category utility trades off intra-class similarity against inter-class dissimilarity of objects, which are described by attribute-value pairs. Intra-class similarity is captured by the conditional probability $P(A_i = V_{ij} \mid C_k)$, where $A_i = V_{ij}$ is an attribute-value pair and $C_k$ is a class. The larger this probability, the more members of the class share the value. Inter-class dissimilarity is captured by the conditional probability $P(C_k \mid A_i = V_{ij})$. The larger this probability, the fewer objects in other classes share the value. CU therefore builds on the product of the two. For a partition $\{C_1, C_2, \ldots, C_n\}$, summing this product over all classes and attribute-value pairs, weighted by $P(A_i = V_{ij})$, gives

$$\sum_{k=1}^{n} \sum_{i} \sum_{j} P(A_i = V_{ij})\, P(A_i = V_{ij} \mid C_k)\, P(C_k \mid A_i = V_{ij}) = \sum_{k=1}^{n} P(C_k) \sum_{i} \sum_{j} P(A_i = V_{ij} \mid C_k)^2,$$

where the right-hand side follows from Bayes' rule, $P(A_i = V_{ij})\, P(C_k \mid A_i = V_{ij}) = P(C_k)\, P(A_i = V_{ij} \mid C_k)$.

Finally, CU is defined as the gain of this quantity over its value without the partition, $\sum_i \sum_j P(A_i = V_{ij})^2$, divided by the number of classes $n$:

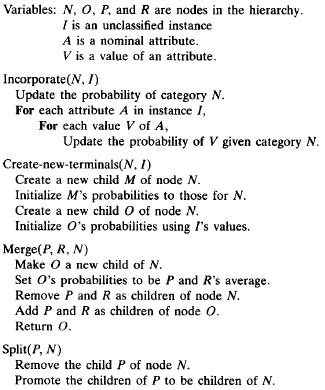

The Cobweb algorithm is outlined in Figure 1, and its four operators are explained in Figure 2 (taken from [3]).
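The following sketch shows category utility and Cobweb-style incremental placement. It is a deliberately simplified, one-level version, not Cobweb itself and not the FACES adaptation:

* Objects are assumed to be dicts of nominal attribute-value pairs.
* Only two of Cobweb's four operators are implemented: adding an object to an existing class and creating a new class.
* No hierarchy is built, so merging and splitting are omitted.

The function names are hypothetical, and the way FACES maps entity features to attributes is not shown.

```python
from collections import Counter


def expected_correct(objs):
    """sum_i sum_j P(A_i = V_ij)^2, with probabilities estimated from objs."""
    counts = Counter((attr, val) for obj in objs for attr, val in obj.items())
    return sum((c / len(objs)) ** 2 for c in counts.values())


def category_utility(partition):
    """CU of a partition {C_1, ..., C_n}; every class must be non-empty."""
    objects = [obj for cls in partition for obj in cls]
    within = sum(len(cls) / len(objects) * expected_correct(cls) for cls in partition)
    return (within - expected_correct(objects)) / len(partition)


def incremental_cluster(objects):
    """Place each arriving object where the resulting partition has the highest CU."""
    partition = []
    for obj in objects:
        # Operator 1: try adding obj to each existing class in turn.
        options = [
            [cls + [obj] if j == i else cls for j, cls in enumerate(partition)]
            for i in range(len(partition))
        ]
        # Operator 2: create a new singleton class for obj.
        options.append(partition + [[obj]])
        # max() keeps the first best option, so ties favour existing classes.
        partition = max(options, key=category_utility)
    return partition


RR = {"color": "red", "shape": "round"}
BS = {"color": "blue", "shape": "square"}
print(category_utility([[RR, RR], [BS, BS]]))  # 0.5
print(category_utility([[RR, BS], [RR, BS]]))  # 0.0
print(len(incremental_cluster([RR, RR, BS, BS])))  # 2
```

The number of classes is never fixed in advance. It emerges from whether creating a new class raises CU, which is the property that motivates using Cobweb here. Because placement is greedy and incremental, the result can depend on the order in which objects arrive. Full Cobweb's merge and split operators help reduce this order sensitivity.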

Evaluation

We evaluate FACES against RELIN [1] and SUMMARUM. We chose DBpedia as the dataset because it was used in the evaluation of RELIN and is a large dataset containing entities from multiple domains. We randomly extracted 50 entities from English DBpedia version 3.9. We asked 15 human judges to create entity summaries of length 5 and 10 (ideal summaries), which we used as the gold standard, and made sure that each entity received at least 7 ideal summaries. We could not reuse RELIN's experimental data because its authors confirmed that the data are not available. We do not process the properties owl:sameAs, rdf:type, db:wordnet_type, db:wikiPageWikiLink, db:wikiPageExternalLink, db:wikiPageUsesTemplate, db:wikiPageRevisionID, db:wikiPageID, dc:subject, and db:Template. In the sample dataset, each entity has at least 17 distinct properties and between 19 and 88 distinct features.
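The preprocessing step above can be sketched as a filter followed by per-entity counts. This assumes the input triples use exactly the same prefixed property names as the exclusion list, matched as whole strings. The function name and example triples are illustrative only.

```python
from collections import defaultdict

# Properties excluded from processing, copied from the list above.
EXCLUDED_PROPERTIES = {
    "owl:sameAs", "rdf:type", "db:wordnet_type", "db:wikiPageWikiLink",
    "db:wikiPageExternalLink", "db:wikiPageUsesTemplate", "db:wikiPageRevisionID",
    "db:wikiPageID", "dc:subject", "db:Template",
}


def feature_stats(triples):
    """Drop excluded properties, then count distinct properties and distinct
    (property, value) features for each subject entity."""
    props = defaultdict(set)
    features = defaultdict(set)
    for subj, prop, val in triples:
        if prop in EXCLUDED_PROPERTIES:
            continue  # excluded properties never enter the feature set
        props[subj].add(prop)
        features[subj].add((prop, val))
    return {subj: (len(props[subj]), len(features[subj])) for subj in features}


triples = [
    ("dbr:Berlin", "rdf:type", "dbo:City"),
    ("dbr:Berlin", "dbo:country", "dbr:Germany"),
    ("dbr:Berlin", "dc:subject", "dbc:Capitals_in_Europe"),
    ("dbr:Berlin", "dbo:river", "dbr:Spree"),
    ("dbr:Berlin", "dbo:river", "dbr:Havel"),
]
print(feature_stats(triples))  # {'dbr:Berlin': (2, 3)}
```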

Experiment 1

Results of our evaluation against the gold standard are listed in Table 1. FACES outperforms the other systems for both k = 5 and k = 10. RELINM is a modified version of RELIN that filters out duplicate properties in the summary. Table 2 shows that changing the search service used by RELIN (Google search API vs. Sindice search API) does not affect the final outcome. We tested this on 5 randomly selected entities.

| System | k = 5 | FACES improvement (k = 5) | k = 10 | FACES improvement (k = 10) | Time per entity (s) |
|---|---|---|---|---|---|
| FACES | 1.4314 | NA | 4.3350 | NA | 0.76 |
| RELIN | 0.4981 | 187 % | 2.5188 | 72 % | 10.96 |
| RELINM | 0.6008 | 138 % | 3.0906 | 40 % | 11.08 |
| SUMMARUM | 1.2249 | 17 % | 3.4207 | 27 % | NA |
| Ideal summary agreement | 1.9168 | | 4.6415 | | |
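The percentage columns for FACES in Table 1 appear to be the relative improvement of FACES over each system, (FACES − other) / other × 100, rounded to a whole percent. This reading is our assumption. Recomputing it from the k = 5 and k = 10 scores in Table 1 reproduces every listed value. The function name is hypothetical.

```python
def faces_improvement(faces_score, other_score):
    """Relative improvement of FACES over another system, in whole percent."""
    return round(100 * (faces_score - other_score) / other_score)


FACES_SCORES = {5: 1.4314, 10: 4.3350}  # Table 1
OTHER_SCORES = {                        # Table 1
    "RELIN": {5: 0.4981, 10: 2.5188},
    "RELINM": {5: 0.6008, 10: 3.0906},
    "SUMMARUM": {5: 1.2249, 10: 3.4207},
}

for system, scores in OTHER_SCORES.items():
    gains = {k: faces_improvement(FACES_SCORES[k], s) for k, s in scores.items()}
    print(system, gains)
# RELIN {5: 187, 10: 72}
# RELINM {5: 138, 10: 40}
# SUMMARUM {5: 17, 10: 27}
```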

| k = 5, Google search API | k = 5, Sindice search API | k = 10, Google search API | k = 10, Sindice search API |
|---|---|---|---|
| 3.5 | 3.4 | 0.5333 | 0.5428 |

Experiment 2

This experiment measures FACES' ability to identify facets, in other words, its ability to identify conceptually similar groups of features in the same way as the ideal summaries do. For this purpose, we evaluate only the overlap of property names between computer-generated summaries and ideal summaries. The results are shown in Table 3.

| System | k = 5 | FACES improvement (k = 5) | k = 10 | FACES improvement (k = 10) |
|---|---|---|---|---|
| FACES | 1.8649 | NA | 5.6931 | NA |
| RELIN | 0.7339 | 154 % | 3.3993 | 69 % |
| RELINM | 0.8695 | 114 % | 4.1551 | 37 % |
| SUMMARUM | 1.6484 | 13 % | 4.4919 | 27 % |
| Ideal summary agreement | 2.3194 | | 5.6228 | |
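The property-name overlap used in this experiment can be sketched as follows. The source does not spell out the aggregation. This version assumes set overlap of property names, averaged over an entity's ideal summaries. The function name and example data are hypothetical.

```python
def property_overlap(summary, ideal_summaries):
    """Mean number of property names a computed summary shares with each ideal summary.

    Summaries are iterables of (property, value) features; values are ignored.
    """
    props = {prop for prop, _ in summary}
    shared = [len(props & {prop for prop, _ in ideal}) for ideal in ideal_summaries]
    return sum(shared) / len(shared)


computed = [("dbo:field", "dbr:Physics"), ("dbo:spouse", "dbr:Pierre_Curie")]
ideals = [
    [("dbo:field", "dbr:Chemistry"), ("dbo:birthPlace", "dbr:Warsaw")],
    [("dbo:field", "dbr:Physics"), ("dbo:spouse", "dbr:Pierre_Curie")],
]
print(property_overlap(computed, ideals))  # 1.5
```

Matching only property names, rather than full features, credits a system for choosing the same facet even when it picks a different value. In the first ideal summary above, `dbo:field` counts as shared although the values differ.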

Experiment 3

In this experiment, we asked 69 users to vote for the summary that best helped them identify the entity. In the first use case, we showed summaries from FACES and RELIN side by side. We then showed summaries from all three systems side by side. The results are shown in Table 4. Users were not told which system produced each summary.

| Use case | FACES % | RELINM % | SUMMARUM % |
|---|---|---|---|
| Use case 1 (two systems) | 84 % | 16 % | NA |
| Use case 2 (three systems) | 54 % | 16 % | 30 % |

Dataset

Evaluation data is available for download.

References

[1] Cheng, G., Tran, T., Qu, Y.: RELIN: relatedness and informativeness-based centrality for entity summarization. In: The Semantic Web – ISWC 2011, pp. 114–129. Springer, Berlin Heidelberg (2011)

[2] Fisher, D.H.: Knowledge acquisition via incremental conceptual clustering. Machine Learning 2(2), 139–172 (1987)

[3] Gennari, J.H., Langley, P., Fisher, D.: Models of incremental concept formation. Artificial Intelligence 40(1), 11–61 (1989)