Entity Identification and Extraction related to a Specific Event

Mariya Quibtiya

Final Project Report for Web Information Systems Class [CS475/675], Fall 2011

Instructor: Amit Sheth [1]

GTA: Ajith Ranabahu [2]

Introduction

This work on entity identification and classification focuses on identifying proper names relevant to a specific event in a collection of tweets from Twitter, and then classifying them into a set of predefined categories of interest, such as:

- Person names (names of people)

- Organization names (companies, government organizations, committees, etc.)

- Location names (cities, countries, etc.)

- Miscellaneous names (e.g., a specific date such as 9/11, a facility such as “Lokpal Bill”, time and monetary expressions, etc.)

Since the advent of social media, people have become increasingly involved in discussing events happening all across the world. In the process, they mention a number of entities related to a specific event, and analysts want deeper insight into what people are talking about and whom they are talking about. To find the prominent entities in a particular event and the polarity of the sentiments associated with them, with the future aim of finding the influential entities related to that event, identification and classification of entities must be done precisely. In the unstructured context of tweets, “Automated and Relevant Entity Extraction” is therefore a challenging yet desirable task.

Automated entity extraction methods spot many spurious entities that are irrelevant to the context or the specific event, but they still help in the real-time evaluation of events. A new but very prominent entity may emerge at any time and needs to be recognized, especially on social media, where it might influence many people or some very critical issues. Thus, the importance of automated entity extraction cannot be overlooked.

The corpus may mention many entities or nouns, but some of the entities mentioned in the tweets may not relate to the event we are focusing on. For example, “Anna Hazare” mentioned in the tweets would relate to the event “India against Corruption”, but “Anna Calvi”, “Anna Wintour”, and “Anna Fischer” would not. So, apart from identifying the proper names in a given corpus, it is also important to disambiguate the entities with respect to their relevance to a particular event. That is, we need to filter out entities like “Anna Calvi” if we are talking about “India against Corruption”. Moreover, since we are working with an unstructured corpus, people do not always follow a structured pattern: they may not use capital letters, may not write names accurately, and may use abbreviations or first or last names only. So, we also need to capture the fact that “Anna”, “AnnaHazare”, “annahazare”, and “hazare” all refer to the same entity, “Anna Hazare”.
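The variant-matching idea in the last sentence can be sketched as follows. This is a minimal illustration, not the method used in the project: the alias table `CANONICAL_ALIASES` and the helpers `normalize` and `resolve` are hypothetical, and mapping a bare “anna” to “Anna Hazare” is only reasonable inside a corpus already restricted to the event.

```python
import re

# Hypothetical alias table for one event: canonical name -> known surface forms.
CANONICAL_ALIASES = {
    "Anna Hazare": {"anna", "hazare", "annahazare", "anna hazare"},
}

def normalize(mention: str) -> str:
    """Trim, drop a leading '#' or '@', lowercase, and collapse whitespace."""
    mention = mention.strip().lstrip("#@").lower()
    return re.sub(r"\s+", " ", mention)

def resolve(mention: str):
    """Return the canonical entity for a mention, or None if it is unknown."""
    key = normalize(mention)
    for canonical, aliases in CANONICAL_ALIASES.items():
        if key in aliases:
            return canonical
    return None
```

A mention such as “Anna Calvi” normalizes to a string that is not in the alias set, so it is left unresolved rather than merged with “Anna Hazare”.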

We begin with a collection of tweets on a particular event. Then, using the approaches described below, we keep refining our candidate sets until we arrive at a concrete list of entities relevant to the context, without redundancy or spurious names. First, we try to capture the names of all the entities mentioned in the corpus, and then gradually improve the accuracy to get the desired set of top entities that people are interested in.

Approaches

I use LinkedGeoData [3] as the background knowledge for location entity spotting. LinkedGeoData uses the information collected by the OpenStreetMap project and makes it available as an RDF knowledge base according to the Linked Data principles. I use the RelevantNodes dataset, which contains 66 million triples. LinkedGeoData provides a SPARQL endpoint for online access. I chose to download the dataset and host it on our own Virtuoso server, so the data can now be accessed from our own SPARQL endpoint [4].
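As a rough illustration of how candidate locations might be requested from such an endpoint, the sketch below only builds a SPARQL query string and does not send it. It assumes that labels are exposed through `rdfs:label` and coordinates through the W3C WGS84 `geo:lat` and `geo:long` properties; the function name `build_candidate_query` and the exact query shape are hypothetical, not taken from the project.

```python
def build_candidate_query(place_name: str, limit: int = 50) -> str:
    """Build a SPARQL query for nodes whose label equals place_name."""
    # Escape backslashes and double quotes so the name is a valid SPARQL literal.
    escaped = place_name.replace("\\", "\\\\").replace('"', '\\"')
    return f"""
PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>
PREFIX geo:  <http://www.w3.org/2003/01/geo/wgs84_pos#>
SELECT ?node ?lat ?long WHERE {{
  ?node rdfs:label ?label ;
        geo:lat ?lat ;
        geo:long ?long .
  FILTER (str(?label) = "{escaped}")
}} LIMIT {limit}
""".strip()
```

Comparing `str(?label)` ignores language tags on the labels. On a store with tens of millions of triples, an exact-match filter like this may be slow without a suitable index.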

Based on linguistic heuristics (e.g., the names of places are usually capitalized in text) and knowledge from LinkedGeoData, the toponyms (place names) can be identified. The identified toponyms can be ambiguous. For example, “Washington” could be the name of a person or the name of a state. This type of ambiguity is called geo/non-geo. In this work, I focus on another type of ambiguity, namely geo/geo, in which one toponym could refer to different geo locations. For each extracted toponym, I obtain the possible geo locations it refers to from LinkedGeoData as candidates. The disambiguation algorithm aims to assign one geo location from the candidates to the toponym.
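The capitalization heuristic combined with a gazetteer lookup can be sketched as below. This is a simplified illustration under stated assumptions: `GAZETTEER` is a tiny hypothetical stand-in for the place labels loaded from LinkedGeoData, and `spot_toponyms` is an illustrative name. Lowercased place names, which are common in tweets, are missed by this version.

```python
import re

# Hypothetical gazetteer standing in for place labels from LinkedGeoData.
GAZETTEER = {"Dayton", "Ohio", "Columbus", "Washington", "New York"}

# One or more consecutive capitalized words, e.g. "New York".
CAPITALIZED_RUN = re.compile(r"\b[A-Z][a-z]+(?:\s+[A-Z][a-z]+)*\b")

def spot_toponyms(text: str) -> list[str]:
    """Return gazetteer entries found inside runs of capitalized words."""
    found = []
    for match in CAPITALIZED_RUN.finditer(text):
        words = match.group().split()
        i = 0
        while i < len(words):
            # Try the longest phrase starting at word i first.
            for j in range(len(words), i, -1):
                phrase = " ".join(words[i:j])
                if phrase in GAZETTEER:
                    found.append(phrase)
                    i = j
                    break
            else:
                i += 1  # no gazetteer entry starts at this word
    return found
```

A sentence-initial word such as “Flying” is capitalized but is dropped because it is not in the gazetteer. A match such as “Washington” still needs the geo/non-geo check discussed above.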

Four different types of context in social media (e.g., Twitter) can be used for the disambiguation: (1) the other place names in the same piece of text, namely the local context, (2) the place names in other pieces of text generated by the same user, (3) the geo-tag attached to the text, and (4) the user location in the profile. Toponyms can be extracted from each type of context in the same way as described above. I use the context types separately to investigate their performance.

As discussed earlier in the introduction, there are different approaches to disambiguation, based on prior knowledge (without context), relations, and distances. I apply them in the following steps with each type of context. First, for all the toponyms (the target and the context), I obtain the candidate geo locations with their type, population, is_in, latitude, and longitude information from LinkedGeoData. Second, I narrow down the search space according to the is_in relations: if there are is_in relations between some candidates of the target and some candidates of the context (e.g., “Dayton” and “Ohio”), or if some candidates of the target and some candidates of the context share the same is_in relation (e.g., “Dayton” and “Columbus”), I keep only those candidates and remove the others. Third, for each candidate of the target toponym, I calculate the minimum average of the pairwise distances from it to the candidates of all the context toponyms, and select the candidate that minimizes this value. Here I set a threshold (500 miles) for the minimum distance, since a match makes little sense if the two locations are too far away from each other. If no solution is obtained after these three steps, I apply the type and population information to make the choice, for example preferring locations of type city or with larger populations.
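The steps above can be sketched in code as follows. This is one possible reading, not the project's implementation: the `Candidate` fields, the function names, and the use of the haversine formula are assumptions. The distance step is interpreted here as averaging, over the context toponyms, the distance to the nearest candidate of each, and the fallback is applied to the narrowed candidate set when one exists.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    name: str
    lat: float
    lon: float
    is_in: str       # label of the containing region; "" if unknown
    kind: str        # e.g. "city", "town", "state"
    population: int

def haversine_miles(a: Candidate, b: Candidate) -> float:
    """Great-circle distance in miles (Earth radius taken as 3958.8 mi)."""
    lat1, lat2 = math.radians(a.lat), math.radians(b.lat)
    dlat = lat2 - lat1
    dlon = math.radians(b.lon - a.lon)
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * 3958.8 * math.asin(math.sqrt(h))

def related_by_is_in(t: Candidate, c: Candidate) -> bool:
    """True if one contains the other, or both share a known parent region."""
    return (t.is_in == c.name or c.is_in == t.name
            or (t.is_in != "" and t.is_in == c.is_in))

def disambiguate(targets, context, max_miles=500.0):
    """targets: candidates of the target toponym.
    context: one candidate list per context toponym.
    Returns (chosen candidate, method name), or (None, "none")."""
    if not targets:
        return None, "none"
    groups = [g for g in context if g]
    # Step 2: keep target candidates linked to any context candidate by is_in.
    kept = [t for t in targets
            if any(related_by_is_in(t, c) for g in groups for c in g)]
    if len(kept) == 1:
        return kept[0], "is_in"
    pool = kept or list(targets)
    # Step 3: average, over context toponyms, of the nearest-candidate distance.
    if groups:
        def score(t):
            return sum(min(haversine_miles(t, c) for c in g)
                       for g in groups) / len(groups)
        best = min(pool, key=score)
        if score(best) <= max_miles:
            return best, "distance"
    # Fallback: prefer type "city", then the larger population.
    return max(pool, key=lambda t: (t.kind == "city", t.population)), "prior"
```

Returning the method name alongside the chosen candidate makes it straightforward to count how many toponyms each step resolved. A geo-tag can be passed as a context group containing a single candidate.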

Experiments

I collect data from Twitter for the experiments. The data are collected using the Twitter Streaming API in two stages: (1) in the first stage, I use the most common place names in the U.S. (according to the Wikipedia page [5]) as keywords to query Twitter; (2) the second stage starts once 5,000 user IDs have been collected from the first stage, and I then track these 5,000 users and obtain their profiles and the tweets they create. Over a one-week period, I collected 2,187,205 tweets in total, of which 7.12% (155,705) mention place names and 1.97% (42,988) have geo-tags; 57.36% (2,868) of the users provide location information (which might be invalid).
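The percentages above use two different denominators: the tweet-level figures are relative to the 2,187,205 collected tweets, while the user-location figure is relative to the 5,000 tracked users. A short check of the arithmetic, with illustrative variable names:

```python
total_tweets = 2_187_205
tracked_users = 5_000

def pct(part: int, whole: int) -> float:
    """Percentage of part in whole, rounded to two decimals."""
    return round(100.0 * part / whole, 2)

place_name_pct = pct(155_705, total_tweets)    # tweets mentioning place names
geo_tag_pct = pct(42_988, total_tweets)        # tweets carrying geo-tags
user_location_pct = pct(2_868, tracked_users)  # users with a profile location
```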

As a preliminary evaluation, a small test set of 100 tweets was manually labeled as the gold standard. Each tweet in the gold standard mentions at least one place name and has at least one type of context available. To assign a geo location to the place name in each tweet, the annotator takes all available context information into consideration. To be unambiguous, each geo location is represented as a node in LinkedGeoData. I ran the disambiguation algorithm on the test set with each type of context separately. The results are summarized in the following figures.

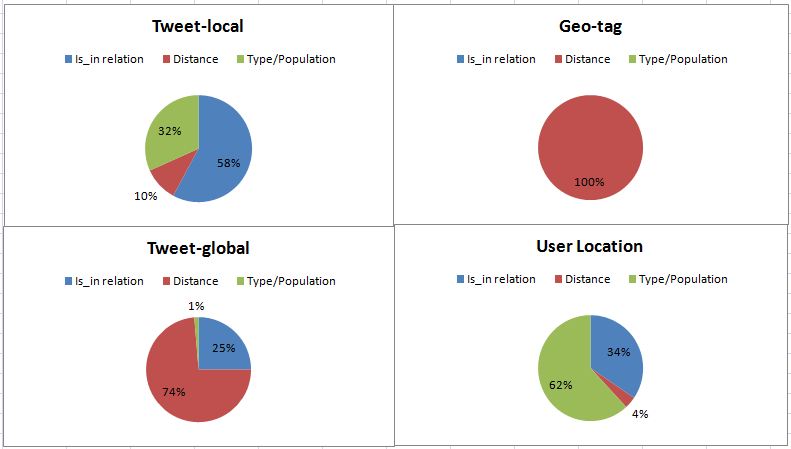

Figure 1 shows the percentage of tweets disambiguated by the different methods with each type of context. With the local context (place names in the same tweet), more than half of the toponyms were disambiguated by the is_in relations, which suggests that is_in relations are very likely to exist among the place names in the same tweet. For the global context (place names mentioned in different tweets generated by the same user) and the geo-tags of the tweets, distance contributes the most to the disambiguation. This is not difficult to understand: compared with the local context, the other context information tends to be at the same layer of the hierarchy as the target (e.g., different cities, rather than a city and a state). It is surprising that, in the last case, the majority of toponyms were disambiguated by prior knowledge (i.e., type and population) rather than by the user location. This might suggest that the user location information is of low quality, or that users talk less about what happens around them and more about what happens elsewhere.

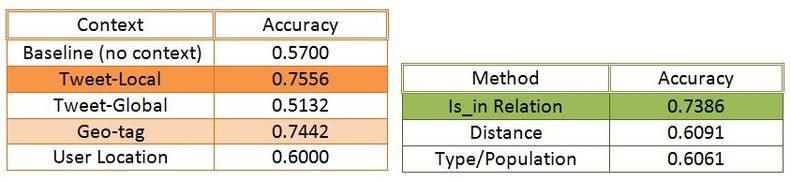

Figure 2 shows the accuracy of the results obtained with different contexts and methods. As the baseline, I applied only prior knowledge about type and population to resolve the toponym, without any context information. The baseline accuracy is 57%. It is again surprising that the baseline performed better than the global context (accuracy 51.32%), which suggests that the global context is not a good disambiguator. The best performance, 75.56%, is achieved with the local context, and it is only 1.14% better than using the geo-tags. This makes sense. Intuitively, place names mentioned adjacent to each other in the text are very likely to be relevant to each other, and the place names mentioned in a tweet are very likely to be the place from which the tweet was sent. From the method perspective, is_in relations stand out as the most reliable way to disambiguate.
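An accuracy figure of this kind can be computed with a few lines. The sketch below is illustrative only: it assumes predictions and gold labels are dictionaries from a tweet ID to a LinkedGeoData node identifier, and that accuracy for a given context is measured over the tweets for which a prediction exists. The function name and data layout are hypothetical.

```python
def accuracy(predicted: dict, gold: dict) -> float:
    """Percentage of predicted tweets whose node matches the gold standard."""
    scored = [tweet_id for tweet_id in predicted if tweet_id in gold]
    if not scored:
        return 0.0
    correct = sum(1 for tweet_id in scored
                  if predicted[tweet_id] == gold[tweet_id])
    return 100.0 * correct / len(scored)
```

Running it once per context type, with the predictions produced using only that context, gives one accuracy value per bar in a comparison like Figure 2.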

Conclusion and Future Work

In this project, I explored the problem of toponym resolution (location disambiguation) in social media, and investigated the effectiveness of different contexts and different methods in the disambiguation task. There are several interesting findings that could be helpful for my future work. According to the experiments, place names mentioned in the same piece of text and the geo-tags attached to the text turn out to be the most useful context, and is_in relations are found to be the most effective way to disambiguate the in-text place names. No matter which kind of context and which method is used, the background knowledge is the foundation of disambiguation. I am currently working on event-centric opinion summarization, and I will further investigate how locations can be helpful for this task.

References

- S. Kinsella, V. Murdock, and N. O'Hare. "I'm Eating a Sandwich in Glasgow": Modeling Locations with Tweets. In Proc. of the 3rd Workshop on Search and Mining User-generated Contents, Glasgow, UK, 2011.

- Daniel Gruhl, Meenakshi Nagarajan, Jan Pieper, Christine Robson, and Amit Sheth. "Context and Domain Knowledge Enhanced Entity Spotting in Informal Text". ISWC 2009.

- Wenbo Zong, Dan Wu, Aixin Sun, Ee-Peng Lim, and Dion Hoe-Lian Goh. 2005. On assigning place names to geography related web pages. In Proceedings of the 5th ACM/IEEE-CS joint conference on Digital libraries (JCDL '05). ACM, New York, NY, 2005.

- Zhu Zhu, Lidan Shou, Kuang Mao, and Gang Chen. 2011. Location disambiguation for geo-tagged images. In Proceedings of the 34th international ACM SIGIR conference on Research and development in Information Retrieval (SIGIR '11). ACM, New York, NY, USA, 2011.