Entity Identification and Extraction related to a Specific Event

Revision as of 22:06, 30 November 2011

Mariya Quibtiya

Final Project Report for Web Information Systems Class [CS475/675], Fall 2011

Instructor: Amit Sheth [1]

GTA: Ajith Ranabahu [2]

Introduction

This work on entity identification and classification focuses primarily on identifying proper names relevant to a specific event in a collection of tweets from Twitter, and then classifying them into a set of pre-defined categories of interest, such as:

- Person names (names of people)

- Organization names (companies, government organizations, committees, etc.)

- Location names (cities, countries etc.)

- Miscellaneous names (e.g., a specific date such as 9/11, a facility such as “Lokpal Bill”, times, monetary expressions, etc.)

Since the advent of social media, people have become increasingly involved in discussing events happening all across the world. In the process, they mention a number of entities related to a specific event, and analysts want deeper insight into what people are talking about and whom they are talking about. To find the prominent entities in a particular event and the polarity of sentiment associated with them, with the future aim of identifying the influential entities in that event, entities must be identified and classified precisely. In the unstructured context of tweets, this makes “automated and relevant entity extraction” a challenging yet desirable task.

Although automated entity extraction methods spot many spurious entities that are irrelevant to the context or to the specific event, they enable real-time evaluation of events. A new but very prominent entity may emerge at any point in time and needs to be recognized, especially on social media, where it might influence many people or some very critical issues. Thus, the importance of automated entity extraction cannot be overlooked.

The corpus may contain mentions of many entities or nouns, but some of the entities mentioned in the tweets may not relate to the event we are focusing on. For example, “Anna Hazare” mentioned in the tweets relates to the event “India against Corruption”, but “Anna Calvi”, “Anna Wintour” and “Anna Fischer” do not. So, apart from identifying the proper names in a given corpus, it is also important to disambiguate the entities with respect to their relevance to a particular event; that is, we need to filter out entities like “Anna Calvi” when we are talking about “India against Corruption”. Moreover, since we are working with an unstructured corpus, people do not necessarily follow a structured pattern such as using capital letters, writing names accurately, or avoiding abbreviations and first or last names alone. So, we also need to capture the fact that “Anna”, “AnnaHazare”, “annahazare” and “hazare” all refer to the same entity, “Anna Hazare”.
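To make the last point concrete, here is a minimal Python sketch of matching surface variants to a canonical name. It is not the report's method: the helper names `normalize` and `matches_entity` are hypothetical, and so is the rule that a mention matches when, ignoring case and non-letters, it equals either the whole name or one of its tokens. The ASCII-only normalization is also an assumption.

```python
import re


def normalize(text: str) -> str:
    """Lowercase a mention and drop '#', '@', spaces and other non-letters.

    Assumption: names are ASCII; accented letters would be discarded.
    """
    return re.sub(r"[^a-z]", "", text.lower())


def matches_entity(mention: str, canonical: str) -> bool:
    """Return True if `mention` looks like a surface variant of `canonical`.

    Hypothetical rule: after normalization the mention must equal the whole
    canonical name with spaces removed ("AnnaHazare", "#annahazare") or one
    of its individual tokens ("Anna", "hazare").
    """
    m = normalize(mention)
    if not m:
        return False
    tokens = [normalize(t) for t in canonical.split()]
    return m == "".join(tokens) or m in tokens


variants = ["Anna", "AnnaHazare", "annahazare", "hazare", "#AnnaHazare", "Anna Calvi"]
print([v for v in variants if matches_entity(v, "Anna Hazare")])
```

Note that “Anna” would equally match “Anna Calvi”. Surface matching of this kind can only group variants; deciding which entity is relevant to the event still needs the context-based filtering described in the Approaches section.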

We begin with a collection of tweets on a particular event. Then, using the approaches described below, we progressively refine our candidate sets until we arrive at a concrete list of entities that are relevant to the context and free of redundancy and spurious names. First, we try to capture the names of all the entities mentioned in the corpus, and then we gradually improve the accuracy to obtain the desired set of top entities that people are interested in.

Approaches

We used two approaches for getting the candidate set of entities from the tweet corpus:

i) Querying DBpedia with extracted noun phrases: We randomly selected 1000 tweets per event for two events, namely “India against Corruption” and “Royal Wedding”. We then pre-processed the tweets to remove noise such as hashtags, mentions and URLs. Next, we used a sentence splitter to split every tweet into its constituent sentences, used the Stanford Parser to tag the sentences with parts of speech, and finally collected all the noun phrases occurring in the entire corpus. However, these extracted noun phrases also contained many undesirable words, such as complaints, tricks, growth and similar abstract nouns. To remove these, we used a short dictionary of commonly used abstract nouns. In addition to the dictionary, we applied a linguistic heuristic: if a noun phrase begins with a lowercase letter in more than 80% of its occurrences, it is unlikely to be a proper noun and therefore unlikely to be an entity. Using these two methods, we removed most of the undesirable abstract words from our candidate sets. Next, we used the DBpedia SPARQL endpoint to check whether a particular noun phrase, or part of it, is an entity. For this, we used an RDF query over the subclasses of the main class “Thing”. Although DBpedia annotated many of the entities correctly, it had a few limitations. First, the noun phrase had to be given exactly as it appears in DBpedia; for example, it identified “Anna Hazare” as the name of a person but returned no results for the input “Anna”. Second, DBpedia is not updated frequently, so it may not return results for many emerging entities.
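The cleaning and filtering steps above can be sketched in Python as follows. This is a sketch under stated assumptions, not the authors' code. The regular expressions are assumed noise rules; in particular, hashtag tokens are dropped whole. The 0.8 threshold comes from the text. The helper names (`clean_tweet`, `lowercase_ratio`, `filter_candidates`, `dbpedia_label_query`) are hypothetical. The SPARQL string is only an illustrative exact-label lookup restricted to DBpedia ontology types; it is not the query used in the report. It does, however, show why “Anna” alone finds nothing: `rdfs:label` must match exactly.

```python
import re

# Assumed noise patterns; the report does not specify its exact cleaning rules.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
MENTION_RE = re.compile(r"@\w+")
HASHTAG_RE = re.compile(r"#\w+")


def clean_tweet(text: str) -> str:
    """Drop URLs, @mentions and whole #hashtag tokens, then collapse whitespace."""
    for pattern in (URL_RE, MENTION_RE, HASHTAG_RE):
        text = pattern.sub(" ", text)
    return " ".join(text.split())


def lowercase_ratio(phrase: str, corpus: list[str]) -> float:
    """Fraction of case-insensitive occurrences of `phrase` whose first letter is lowercase."""
    pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
    firsts = [m.group(0)[0] for text in corpus for m in pattern.finditer(text)]
    if not firsts:
        return 0.0
    return sum(c.islower() for c in firsts) / len(firsts)


def filter_candidates(phrases, corpus, abstract_nouns, max_lower=0.8):
    """Keep noun phrases that are not dictionary abstract nouns and are not
    lowercase-initial in more than `max_lower` of their occurrences."""
    kept = []
    for phrase in phrases:
        if phrase.lower() in abstract_nouns:
            continue
        if lowercase_ratio(phrase, corpus) > max_lower:
            continue
        kept.append(phrase)
    return kept


def dbpedia_label_query(phrase: str) -> str:
    """Build a SPARQL query for resources whose English label equals `phrase`
    exactly (illustrative query, not the authors' own)."""
    label = phrase.replace("\\", "\\\\").replace('"', '\\"')
    return (
        "PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>\n"
        "SELECT DISTINCT ?entity ?type WHERE {\n"
        f'  ?entity rdfs:label "{label}"@en ;\n'
        "          a ?type .\n"
        '  FILTER(STRSTARTS(STR(?type), "http://dbpedia.org/ontology/"))\n'
        "}"
    )
```

In this sketch, a phrase that never occurs in the corpus gets a ratio of 0 and is kept. That is harmless here, because candidates are drawn from the corpus itself.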

ii) Using an Alchemy API-based approach: Since DBpedia did not give high-precision results, we followed another approach using the Alchemy API. Because API-based services generally limit the number of calls that can be made per day, we dumped the entire corpus into a text file after the pre-processing step and provided that file as a single input to the Alchemy API. This not only reduces the number of calls compared with submitting one tweet at a time, but also improves the results by providing some supporting context. We considered four parameters in the results: the name/text that identifies the entity, its type, its frequency in the corpus, and its relevance score. Although the API identified entities quite precisely, some results still had to be filtered and improved. First, it included names such as “Anna Wintour” and “Anna Calvi” with a comparatively high relevance score of more than 0.49 on a 0–1 scale, even though they are not relevant to the specified event “India against Corruption”. Second, it treated “Jan Lokpal” and “Jan Lokpal Bill” as two different entities. Third, it assigned incorrect types to a few entities; for example, it associated the type “Person” with the entity “Jan Lokpal Bill”, which should be classified under “Facility”.
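One simple way to post-process such results for the second problem is sketched below. This is a hypothetical heuristic, not the report's method and not part of the Alchemy API. Each result is held in a small record with the four parameters listed above; the field names are our own. An entity is then folded into a longer entity whose leading tokens equal its own tokens, so “Jan Lokpal” is merged into “Jan Lokpal Bill”.

```python
from dataclasses import dataclass


@dataclass
class EntityResult:
    # The four parameters kept from each result; field names are hypothetical.
    text: str
    type: str
    count: int
    relevance: float


def merge_prefix_duplicates(results):
    """Fold each entity into a longer one whose leading tokens equal its tokens.

    Counts are summed and the higher relevance is kept; the longer entity's
    type is retained unchanged (so a wrong type is not corrected here).
    """
    # Longest names first, so shorter names can be folded into them.
    ordered = sorted(results, key=lambda r: len(r.text.split()), reverse=True)
    merged = []
    for r in ordered:
        tokens = r.text.lower().split()
        for m in merged:
            if m.text.lower().split()[:len(tokens)] == tokens:
                m.count += r.count
                m.relevance = max(m.relevance, r.relevance)
                break
        else:
            merged.append(EntityResult(r.text, r.type, r.count, r.relevance))
    return merged
```

This heuristic has two limits. A bare first name such as “Anna” would be folded into whichever longer “Anna …” entity comes first, and the wrong type is carried over unchanged. Neither the first nor the third problem is solved by it, which is why the context-based filtering and similarity grouping described next are still needed.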

Since both of the above approaches also returned entities that were not relevant to the context, we still needed a filtering mechanism to attain higher precision. We therefore used a Wikipedia-based knowledge approach to improve relevance.

We selected the top three Wikipedia pages returned by a Google search for a specific event and obtained all the entities linked to them by one-hop relationships. Using this set along with the set of top n-grams, we extracted relevant key terms from every tweet. In this way, we created a larger reference set against which we compared our candidate lists: i) noun phrases, ii) entities from DBpedia querying, and iii) entities from the Alchemy API.
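The comparison against the reference set can be sketched in Python as shown below. Several parts are assumptions, not details from the report. The one-hop Wikipedia entities are taken as an already-fetched list, since no Wikipedia or Google API is called here. Word bigrams are used for the n-grams. The matching rule, which keeps a candidate only if every one of its tokens occurs somewhere in the reference set, is a hypothetical choice, as are the helper names.

```python
from collections import Counter


def top_ngrams(tweets, n=2, k=20):
    """Return the k most frequent lowercased word n-grams across the tweets."""
    counts = Counter()
    for tweet in tweets:
        words = tweet.lower().split()
        counts.update(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    return {gram for gram, _ in counts.most_common(k)}


def relevant_candidates(candidates, wiki_entities, ngrams):
    """Keep candidates whose every token occurs in the reference set built from
    one-hop Wikipedia entities plus top n-grams (hypothetical matching rule)."""
    reference = {e.lower() for e in wiki_entities} | set(ngrams)
    ref_tokens = {tok for term in reference for tok in term.split()}
    return [c for c in candidates if all(t in ref_tokens for t in c.lower().split())]


# Illustrative data only, not taken from the report's corpus.
wiki = ["Anna Hazare", "Jan Lokpal Bill", "Arvind Kejriwal"]
tweets = ["anna hazare on fast", "support anna hazare now", "jan lokpal bill passed"]
candidates = ["Anna Hazare", "Anna Calvi", "Jan Lokpal", "Anna Wintour", "Kejriwal"]
print(relevant_candidates(candidates, wiki, top_ngrams(tweets)))
```

Under this rule, “Anna Calvi” and “Anna Wintour” are dropped because “calvi” and “wintour” never occur in the event's reference set, even though “anna” does.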

The final step was to group similar entities together and identify the top entities being talked about. For this, we experimented with 20 similarity measures and finally selected the two that performed fairly well: “QGramsDistance similarity with a threshold of 0.75” and “Jaro similarity with a threshold of 0.8”. QGramsDistance gave the best results, but some additional grouping was needed, which the Jaro similarity measure provided. Thus, based on frequency and these two similarity measures, we obtained the final list of the top 10 entities (along with their groups of similar strings) being talked about.
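The grouping step can be sketched in Python with self-contained versions of the two measures. This is a sketch under assumptions. The Jaro function is the standard definition. The q-gram measure is written in the spirit of a “QGramsDistance” similarity: padded character trigrams, block distance between the two q-gram count vectors, normalized by the total number of q-grams. The exact tokenization of the library the authors used may differ. The greedy strategy is also an assumption: visit names by descending frequency and join the first group whose representative passes either threshold. The thresholds 0.75 and 0.8 and the top-10 cut-off are taken from the text.

```python
from collections import Counter


def qgrams(s, q=3, pad="#"):
    """Count padded character q-grams of s."""
    padded = pad * (q - 1) + s + pad * (q - 1)
    return Counter(padded[i:i + q] for i in range(len(padded) - q + 1))


def qgram_similarity(a, b, q=3):
    """1 - (block distance between q-gram counts) / (total q-grams in both)."""
    ga, gb = qgrams(a, q), qgrams(b, q)
    total = sum(ga.values()) + sum(gb.values())
    distance = sum(abs(ga[g] - gb[g]) for g in ga.keys() | gb.keys())
    return (total - distance) / total


def jaro(s1, s2):
    """Standard Jaro similarity."""
    if s1 == s2:
        return 1.0
    n1, n2 = len(s1), len(s2)
    if n1 == 0 or n2 == 0:
        return 0.0
    window = max(max(n1, n2) // 2 - 1, 0)
    m1, m2 = [False] * n1, [False] * n2
    matches = 0
    for i, c in enumerate(s1):
        for j in range(max(0, i - window), min(n2, i + window + 1)):
            if not m2[j] and s2[j] == c:
                m1[i] = m2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Count matched characters that appear in a different order.
    mismatched, k = 0, 0
    for i in range(n1):
        if m1[i]:
            while not m2[k]:
                k += 1
            if s1[i] != s2[k]:
                mismatched += 1
            k += 1
    t = mismatched / 2
    return (matches / n1 + matches / n2 + (matches - t) / matches) / 3


def group_entities(freqs, q_thresh=0.75, j_thresh=0.8, top_k=10):
    """Greedily group names by frequency; return the top_k groups by total count."""
    groups = []
    for name, count in sorted(freqs.items(), key=lambda kv: (-kv[1], kv[0])):
        key = name.lower()
        for g in groups:
            rep = g["rep"].lower()
            if qgram_similarity(key, rep) >= q_thresh or jaro(key, rep) >= j_thresh:
                g["members"].append(name)
                g["total"] += count
                break
        else:
            groups.append({"rep": name, "members": [name], "total": count})
    groups.sort(key=lambda g: -g["total"])
    return groups[:top_k]
```

For example, `qgram_similarity("jan lokpal", "jan lokpal bill")` comes out at about 0.76 with this tokenization, so the two would share a group at the 0.75 threshold.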

Experiments

I collected data from Twitter for the experiments, using the Twitter Streaming API in two stages. (1) In the first stage, the most common place names in the U.S. (according to the Wikipedia page [3]) were used as keywords to query Twitter. (2) The second stage started once 5,000 user IDs had been collected in the first stage; those 5,000 users were tracked, and their profiles and the tweets they created were obtained. Over a one-week period, a total of 2,187,205 tweets were collected. Of these, 7.12% (155,705) mention place names and 1.97% (42,988) have geo-tags, while 57.36% (2,868) of the users provide location information (which might be invalid).
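The two-stage collection logic can be sketched in Python as shown below. It is independent of any Twitter client library. The stream is assumed to be an iterable of dictionaries with hypothetical `"text"` and `"user_id"` keys, standing in for whatever objects the Streaming API client returned. Keyword matching is a plain case-insensitive substring test, which is an assumption. The 5,000-user limit comes from the text.

```python
def stage_one_user_ids(stream, place_names, limit=5000):
    """Stage 1: collect unique ids of users whose tweets mention a place-name keyword."""
    keywords = [p.lower() for p in place_names]
    user_ids, seen = [], set()
    for tweet in stream:
        text = tweet["text"].lower()
        if any(k in text for k in keywords) and tweet["user_id"] not in seen:
            seen.add(tweet["user_id"])
            user_ids.append(tweet["user_id"])
            if len(user_ids) >= limit:
                break  # start stage 2 once enough users are collected
    return user_ids


def stage_two_tweets(stream, user_ids):
    """Stage 2: keep every tweet authored by one of the tracked users."""
    tracked = set(user_ids)
    return [t for t in stream if t["user_id"] in tracked]
```

Substring matching is deliberately loose. A real run would more likely rely on the API's own keyword tracking and then filter more strictly.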

As a preliminary evaluation, a small test set of 100 tweets was manually labeled as the gold standard. Each tweet in the gold standard mentions at least one place name and has at least one type of context available. To assign a geo-location to the place name in each tweet, the annotator had to take all the available context information into consideration. To avoid ambiguity, each geo-location is represented as a node in LinkedGeoData. The disambiguation algorithm was run on the test set with each type of context separately. The results are summarized in the following figures.

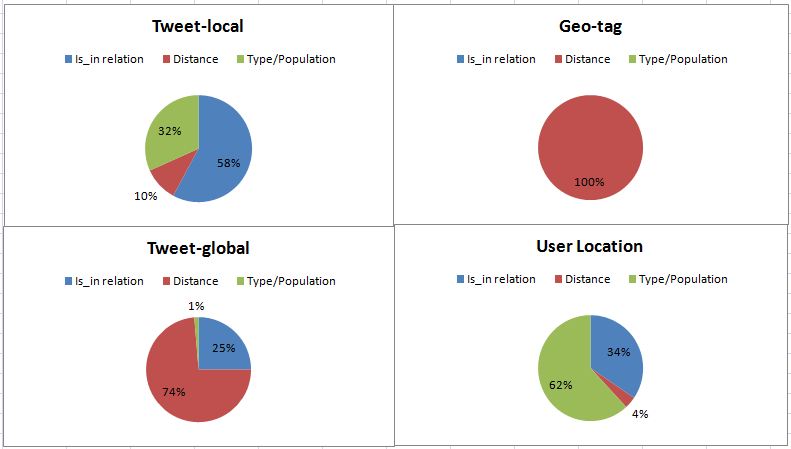

Figure 1 shows the percentage of tweets disambiguated by each method with each type of context. With the local context (place names in the same tweet), more than half of the toponyms were disambiguated by is_in relations, which suggests that is_in relations are very likely to exist among place names in the same tweet. For the global context (place names mentioned in different tweets generated by the same user) and for geo-tags attached to the tweets, distance is the method that contributes most to disambiguation. This is not difficult to understand: compared with the local context, the other types of context information tend to be at the same level of the hierarchy as the target (e.g., different cities, rather than a city and a state). Surprisingly, in the last case (user location), the majority of toponyms were disambiguated by prior knowledge (i.e., type and population) rather than by the user location. This might suggest that user location information is of low quality, or that users discussed less about what happened around them and more about what happened elsewhere.
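The three methods named above (is_in relations, distance, and prior knowledge of type and population) can be combined into a simple cascade. The Python sketch below is an assumption, not the algorithm evaluated here. The order of the steps, the `Candidate` record and its fields, and the numeric `type_rank` scale are all hypothetical. Distance is great-circle (haversine) distance in kilometres. The coordinates in the usage example are approximate and the populations are placeholders, not real data.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Candidate:
    node_id: str      # e.g. a LinkedGeoData node id (hypothetical value)
    type_rank: int    # assumed scale: lower = more prominent place type
    population: int
    lat: float
    lon: float
    is_in: set = field(default_factory=set)  # names of containing places


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres on a sphere of radius 6371 km."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def disambiguate(candidates, context_names=(), context_points=()):
    """Return (chosen candidate, method used) via an assumed three-step cascade."""
    names = {n.lower() for n in context_names}
    # 1) is_in: prefer candidates contained in a place named in the context.
    linked = [c for c in candidates if names & {p.lower() for p in c.is_in}]
    if linked:
        return max(linked, key=lambda c: c.population), "is_in"
    # 2) distance: closest candidate to any context coordinate (e.g. a geo-tag).
    if context_points:
        best = min(candidates, key=lambda c: min(
            haversine_km(c.lat, c.lon, la, lo) for la, lo in context_points))
        return best, "distance"
    # 3) prior knowledge: most prominent type, then largest population.
    return min(candidates, key=lambda c: (c.type_rank, -c.population)), "prior"


# Usage with approximate coordinates and placeholder populations.
il = Candidate("springfield_il", 1, 100000, 39.80, -89.65, {"Illinois"})
ma = Candidate("springfield_ma", 1, 150000, 42.10, -72.59, {"Massachusetts"})
print(disambiguate([il, ma], context_names=["Illinois"])[1])  # is_in
```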

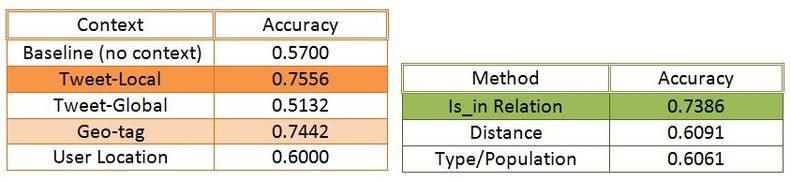

Figure 2 shows the accuracy of the results obtained with different contexts and methods. As the baseline, I applied only prior knowledge about type and population to resolve each toponym, without any context information; the baseline accuracy is 57%. Surprisingly, the baseline performed better than the global context (accuracy 51.32%), which suggests that the global context is not a good disambiguator. The best performance, 75.56%, is achieved using the local context, and it is only 1.14% better than using the geo-tags. This makes sense intuitively: place names mentioned adjacent to each other in the text are very likely relevant to each other, and the place names mentioned in tweets are very likely the places where the tweets were sent from. From the method perspective, is_in relations stand out as the most convincing way to disambiguate.

Conclusion and Future Work

In this project, we tried to identify and extract different entities (primarily proper nouns) mentioned in an unstructured corpus collected from Twitter on a particular event, and followed three approaches to get the desired results. We found that using Wikipedia as a knowledge base gave the best results for identifying the top relevant entities occurring frequently in the corpus. Also, since APIs generally limit the number of calls per day, the candidate set of noun phrases could be used directly with the Wikipedia-based approach to get the most precise results. We plan to extend this work to target-specific sentiment analysis to determine the polarity of sentiment associated with the prominent entities in an event, i.e. to know “what people think” about a particular entity. We also plan to apply this work to real-time analysis of events on Twitris, to identify the influential entities in an event and the way they influence people on social media.
