ElectionPrediction

Examining the Predictive Power of Different User Groups in Predicting 2012 U.S. Republican Presidential Primaries

Lu Chen, Wenbo Wang, and Amit P. Sheth

Introduction

Existing studies that use social data to predict election results have focused on deriving measures or indicators (e.g., mention counts or sentiment toward a party or candidate) from social data to perform the prediction. They treat all users equally and ignore the fact that social media users engage in elections in different ways and with different levels of involvement. A recent study <ref>Mustafaraj, E. and Finn, S. and Whitlock, C. and Metaxas, P.T.: Vocal minority versus silent majority: Discovering the opinions of the long tail. In: Proceedings of the IEEE 3rd International Conference on Social Computing, pp. 103--110 (2011)</ref> has shown that social media users from different groups (e.g., "silent majority" vs. "vocal minority") differ significantly in the content they generate and in their tweeting behavior. However, the effect of these differences on predicting election results has not yet been explored. For example, in our study, 56.07% of the Twitter users who participated in the discussion of the 2012 U.S. Republican Primaries posted only one tweet. Identifying the voting intent of these users could be more challenging than identifying that of users who post more tweets. Will such differences lead to different prediction performance? Furthermore, the users participating in the discussion may have different political preferences. Is a prediction based on right-leaning users more accurate than one based on left-leaning users, given that these are the Republican Primaries? Exploring these questions can expand our understanding of social-media-based prediction and shed light on using user sampling to further improve prediction performance.

Here, we study different groups of social media users who engage in discussions of elections, and compare the predictive power of these user groups. Specifically, we chose the 2012 U.S. Republican Presidential Primaries on Super Tuesday [1], contested by four candidates: Newt Gingrich, Ron Paul, Mitt Romney and Rick Santorum. We collected 6,008,062 tweets from 933,343 users talking about these four candidates in the eight-week period before the elections. All users are characterized along four dimensions: engagement degree, tweet mode, content type, and political preference. We first investigated the user categorization on each dimension, and then compared the different user groups on the task of predicting the results of the Super Tuesday races in 10 states. Instead of using tweet volume or the overall sentiment of the tweet corpus as the predictor, we estimated the "vote" of each user by analyzing his/her tweets, and predicted the results by "vote-counting". The results were evaluated in two ways: (1) the accuracy of predicting winners, and (2) the error rate between the predicted votes and the actual votes for each candidate.
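The "vote-counting" idea and the two evaluation measures can be illustrated with a minimal sketch. It assumes that each user has already been assigned a single estimated vote (how that vote is estimated is not covered here), and it takes the error rate to be the mean absolute difference between predicted and actual vote shares, which is an assumption rather than a definition given in the text. All function names and the toy data are hypothetical.

```python
from collections import Counter


def vote_shares(user_votes):
    """Turn one estimated vote per user into per-candidate vote shares."""
    counts = Counter(user_votes.values())
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no votes to count")
    return {candidate: n / total for candidate, n in counts.items()}


def predicted_winner(shares):
    """The candidate with the largest predicted vote share."""
    return max(shares, key=shares.get)


def mean_absolute_error(predicted, actual):
    """Assumed error measure: mean |predicted share - actual share| over candidates."""
    return sum(abs(predicted.get(c, 0.0) - actual[c]) for c in actual) / len(actual)


# Toy data (not from the study): one estimated vote per user.
user_votes = {"u1": "Romney", "u2": "Romney", "u3": "Santorum", "u4": "Paul"}
actual = {"Romney": 0.40, "Santorum": 0.35, "Paul": 0.15, "Gingrich": 0.10}

shares = vote_shares(user_votes)
winner = predicted_winner(shares)
error = mean_absolute_error(shares, actual)
```

Counting one vote per user, rather than counting tweets, keeps a small number of very active users from dominating the prediction.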

User Categorization

Using the Twitter Streaming API, we collected tweets that contain the words "gingrich", "romney", "ron paul", or "santorum" from January 10th to March 5th (Super Tuesday was March 6th). In total, the dataset comprises 6,008,062 tweets from 933,343 users. In this section, we discuss user categorization on four dimensions, and study the participation behaviors of different user groups.

Categorizing Users by Engagement Degree

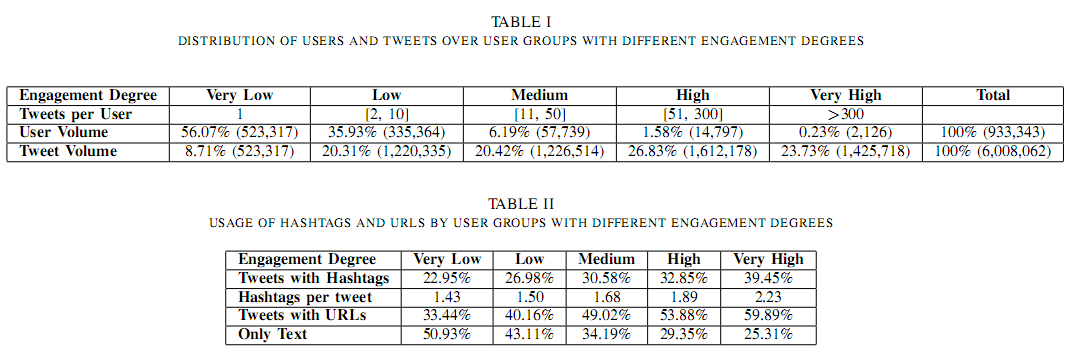

We use the number of tweets posted by a user to measure his/her engagement degree. The fewer tweets a user posts, the harder it is to predict the user's voting intent. An extreme example is predicting the voting intent of a user who posted only one tweet. Thus, we want to examine the predictive power of user groups with different engagement degrees. Specifically, we divided users into the following five groups: users who post only one tweet (very low), 2-10 tweets (low), 11-50 tweets (medium), 51-300 tweets (high), and more than 300 tweets (very high). Table I shows the distribution of users and tweets over the five engagement categories. We found that more than half of the users in the dataset belong to the very low group, which contributes only 8.71% of the tweet volume, while the very highly engaged group contributes 23.73% of the tweet volume with only 0.23% of all the users. This raises the question of whether tweet volume is a proper predictor, given that a small group of users can produce a large number of tweets.
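The five engagement groups above can be sketched as a simple bucketing function. The cut points come from the text; the function names and the toy counts are hypothetical.

```python
from collections import Counter


def engagement_level(tweet_count):
    """Map a user's tweet count to one of the five engagement groups."""
    if tweet_count < 1:
        raise ValueError("a user in the dataset has at least one tweet")
    if tweet_count == 1:
        return "very low"
    if tweet_count <= 10:
        return "low"
    if tweet_count <= 50:
        return "medium"
    if tweet_count <= 300:
        return "high"
    return "very high"


def engagement_distribution(tweets_per_user):
    """Share of users and share of tweet volume contributed by each group."""
    users, tweets = Counter(), Counter()
    for count in tweets_per_user.values():
        level = engagement_level(count)
        users[level] += 1
        tweets[level] += count
    n_users, n_tweets = sum(users.values()), sum(tweets.values())
    return {
        level: (users[level] / n_users, tweets[level] / n_tweets)
        for level in users
    }


# Toy data (not from the study): tweet counts for four users.
dist = engagement_distribution({"u1": 1, "u2": 1, "u3": 8, "u4": 390})
```

Even in this toy example the single very highly engaged user accounts for most of the tweet volume, which mirrors the concern about volume-based predictors.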

To further study the behaviors of users at different engagement levels, we examined the usage of hashtags and URLs in different user groups (see Table II). We found that users who are more engaged in the discussion use more hashtags and URLs in their tweets. Since hashtags and URLs are frequently used on Twitter as means of promotion (e.g., hashtags can be used to create trending topics), their usage reflects the users' intent to draw attention to the topic they discuss. The more engaged users show this intent more strongly and are more involved in the election event. Specifically, only 22.95% of all tweets created by very lowly engaged users contain hashtags, whereas this proportion increases to 39.4% in the very high engagement group. In addition, the average number of hashtags per tweet (among the tweets that contain hashtags) is 1.43 in the very low engagement group and 2.68 for the very highly engaged users. The users who are more engaged also use more URLs and generate fewer text-only tweets (tweets that contain no hashtag or URL). Later, we will see whether and how such differences among user engagement groups lead to varied results in predicting the elections.
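The hashtag and URL statistics described above could be computed along the following lines. This is a rough sketch: the regular expressions are simplified assumptions about what counts as a hashtag or URL, not the matching rules used in the study, and the function name and sample tweets are hypothetical.

```python
import re

# Simplified patterns (assumptions): a hashtag is '#' plus word characters,
# a URL is anything starting with http:// or https:// up to whitespace.
HASHTAG = re.compile(r"#\w+")
URL = re.compile(r"https?://\S+")


def usage_stats(tweets):
    """Hashtag and URL usage over a list of tweet texts."""
    hashtag_counts = []
    has_url = []
    for text in tweets:
        has_url.append(URL.search(text) is not None)
        # Remove URLs first so that '#fragment' parts are not counted as hashtags.
        hashtag_counts.append(len(HASHTAG.findall(URL.sub("", text))))

    n = len(tweets)
    with_hashtags = [c for c in hashtag_counts if c > 0]
    text_only = sum(1 for c, u in zip(hashtag_counts, has_url) if c == 0 and not u)
    return {
        "share_with_hashtags": len(with_hashtags) / n,
        "avg_hashtags_per_hashtag_tweet": (
            sum(with_hashtags) / len(with_hashtags) if with_hashtags else 0.0
        ),
        "share_with_urls": sum(has_url) / n,
        "share_text_only": text_only / n,
    }


# Toy tweets (not from the dataset).
stats = usage_stats([
    "Go #Romney #GOP",
    "Santorum speech http://example.com/a",
    "just text",
    "#tcot news http://example.com/b#frag",
])
```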

Categorizing Users by Tweet Mode

There are two main ways of producing a tweet: the user creates the tweet himself/herself (original tweet) or forwards another user's tweet (retweet). Original tweets are considered to reflect the users' attitude. The reasons for retweeting, however, vary (e.g., to inform or entertain the user's followers, or to be friendly to the person who created the tweet), so retweets do not necessarily reflect the users' thoughts. This may lead to different prediction performance between users who post more original tweets and users who post more retweets, since the voting intent of the latter is more difficult to recognize.

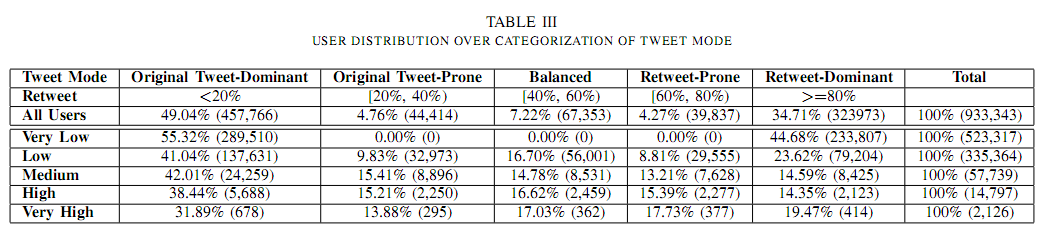

According to how users prefer to generate their tweets, i.e., their tweet mode, we classified the users as original tweet-dominant, original tweet-prone, balanced, retweet-prone and retweet-dominant. A user is classified as original tweet-dominant if less than 20% of all his/her tweets are retweets, and as retweet-dominant if more than 80% of all his/her tweets are retweets. In Table III, we illustrate the categorization, the user distribution over the five categories, and the tweet mode of users in different engagement groups. It is interesting to find that the original tweet-dominant group accounts for the biggest proportion of users in every user engagement group, and that this proportion declines as the degree of user engagement increases (e.g., 55.32% of very lowly engaged users are original tweet-dominant, while only 31.89% of very highly engaged users are original tweet-dominant). It is also worth noting that a significant number of users (34.71% of all the users) belong to the retweet-dominant group, whose voting intent might be difficult to detect.
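A minimal sketch of the tweet-mode categorization follows. Only the two outer cut points (less than 20% and more than 80% retweets) are stated in the text; the inner boundaries at 40% and 60% are assumptions made here for illustration (the actual boundaries are given in Table III), and the function name is hypothetical.

```python
def tweet_mode(num_retweets, num_tweets):
    """Classify a user on a five-point scale by his/her share of retweets.

    Integer arithmetic (5 * retweets compared with multiples of tweets)
    avoids floating-point issues at the boundaries.
    """
    if num_tweets <= 0 or not 0 <= num_retweets <= num_tweets:
        raise ValueError("need 0 <= num_retweets <= num_tweets and num_tweets > 0")
    r5 = 5 * num_retweets
    if r5 < num_tweets:            # retweet share < 20% (from the text)
        return "original tweet-dominant"
    if r5 < 2 * num_tweets:        # share < 40% (assumed boundary)
        return "original tweet-prone"
    if r5 <= 3 * num_tweets:       # share <= 60% (assumed boundary)
        return "balanced"
    if r5 <= 4 * num_tweets:       # share <= 80%
        return "retweet-prone"
    return "retweet-dominant"      # share > 80% (from the text)
```

Note that a user with a single tweet can only fall into one of the two dominant groups, since his/her retweet share is either 0% or 100%.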

Categorizing Users by Content Type

Based on content, tweets can be classified into two classes -- opinion and information (i.e., subjective and objective). Studying the difference between the users who post more information and the users who are keen to express their opinions could provide us with another perspective in understanding the effect of using these two types of content in electoral prediction.

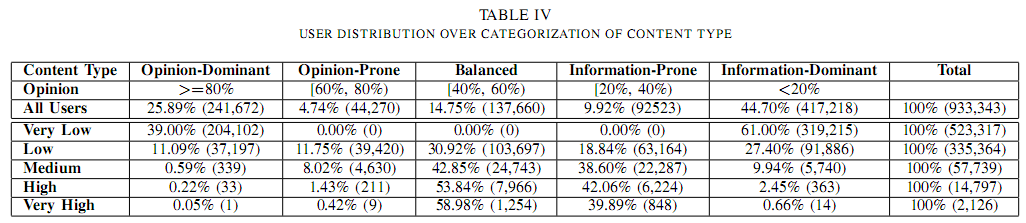

We first identified whether a tweet represents positive or negative opinion about an election candidate using the method proposed in a recent study. <ref>Chen, L. and Wang, W. and Nagarajan, M. and Wang, S. and Sheth, A.P.: Extracting Diverse Sentiment Expressions with Target-dependent Polarity from Twitter. In: Proceedings of the 6th International AAAI Conference on Weblogs and Social Media. (2012)</ref> The tweets that are positive or negative about any candidate are considered opinion tweets, and the tweets that are neutral about all the candidates are considered information tweets. We also used a five-point scale to classify the users based on whether they post more opinion or information with their tweets: opinion-dominant, opinion-prone, balanced, information-prone and information-dominant. Table IV shows the user distribution among all the users, and the users in different engagement groups categorized by content type.

The users from the very low engagement group have only one tweet, so they belong either to the opinion-dominant group (39%) or to the information-dominant group (61%). As users' engagement degree increases from low to very high, the proportions of opinion-dominant, opinion-prone and information-dominant users decrease dramatically, from 11.09% to 0.05%, 11.75% to 0.42%, and 27.40% to 0.66%, respectively. In contrast, the proportions of balanced and information-prone users grow. In the high and very high engagement groups, the balanced and information-prone users together account for more than 95% of all users. This shows a tendency for more engaged users to post a mixture of the two types of content, with either similar proportions of opinion and information or a larger proportion of information.

Identifying Users' Political Preference

Since we focused on the Republican Presidential Primaries, it should be interesting to compare two groups of users with different political preferences -- left-leaning and right-leaning. To perform such a comparison, we needed to assess users' political preference.

We collected a set of Twitter users with known political preferences from Twellow[2]. Specifically, we acquired 10,324 users who are labeled as Republican, conservative, Libertarian or Tea Party as right-leaning users, and 9,545 users who are labeled as Democrat, liberal or progressive as left-leaning users. Using the Twitter Search API, we then obtained the follower ids for each of the 1,000 left-leaning users (denoted as L) and the 1,000 right-leaning users (denoted as R) who have the most followers. Among the remaining users that are not contained in L or R, there are 1,169 left-leaning users and 2,172 right-leaning users included in our dataset of the 2012 Republican Primaries. We denote the set of these 3,341 users as T.

The intuition is that a user tends to follow others who share his/her political preference. The more right-leaning users one follows, the more likely it is that he/she belongs to the right-leaning group. Among all the users that a user is following, let N_l be the number of left-leaning users from L and N_r be the number of right-leaning users from R. We estimated the probability that a user is left-leaning as N_l/(N_l+N_r), and the probability that a user is right-leaning as N_r/(N_l+N_r). A user is labeled as left-leaning (right-leaning) if the probability that he/she is left-leaning (right-leaning) exceeds a threshold. Empirically, we set the threshold to 0.6 in our study. We tested this method on the labeled dataset T, and it correctly identified the political preferences of 3,088 of the 3,341 users (an accuracy of 0.9243).
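The follower-based estimate can be written down directly from the definitions of N_l, N_r and the 0.6 threshold. The sketch below assumes that the accounts a user follows and the seed sets L and R are available as Python sets of user ids; the function name and the toy ids are hypothetical.

```python
def political_preference(following, left_seed, right_seed, threshold=0.6):
    """Label a user from the seed accounts he/she follows.

    following  -- set of ids the user follows
    left_seed  -- set L of known left-leaning ids
    right_seed -- set R of known right-leaning ids
    Returns "left-leaning", "right-leaning", or None if undecided.
    """
    n_l = len(following & left_seed)
    n_r = len(following & right_seed)
    if n_l + n_r == 0:
        return None  # follows nobody in L or R, so no estimate is possible
    p_left = n_l / (n_l + n_r)
    p_right = n_r / (n_l + n_r)
    if p_left > threshold:
        return "left-leaning"
    if p_right > threshold:
        return "right-leaning"
    return None  # follows similar numbers of left- and right-leaning users


# Toy seed sets (not real accounts).
L = {"l1", "l2", "l3"}
R = {"r1", "r2", "r3"}

label_a = political_preference({"r1", "r2", "l1", "x"}, L, R)   # p_right = 2/3
label_b = political_preference({"r1", "l1"}, L, R)              # p_left = p_right = 0.5
label_c = political_preference({"x", "y"}, L, R)                # no seed accounts followed
```

Because the threshold is above 0.5, at most one of the two labels can apply to any user, and users with a near-even split are left unlabeled.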

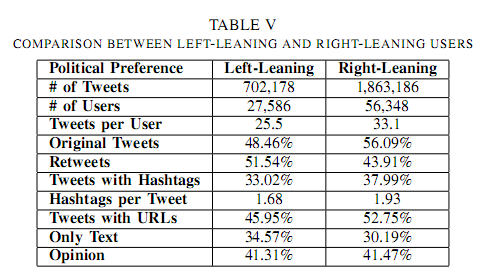

In total, this method identified the political preferences of 83,934 users. The other users may not follow any of the users in L or R, or may follow similar numbers of left-leaning and right-leaning users, so their political preferences could not be identified. Table V shows the comparison of left-leaning and right-leaning users in our dataset. We found that right-leaning users were more involved in this election event in several ways. Specifically, the number of right-leaning users was two times more than that of left-leaning users, and the right-leaning users generated 2.65 times as many tweets as the left-leaning users. Compared with the left-leaning users, the right-leaning users tended to create more original tweets and used more hashtags and URLs in their tweets. This result is quite reasonable, since it was a Republican election, with which right-leaning users are expected to be more concerned than left-leaning users.

Accomplishments

- I got the idea for this work while taking the Semantic Web course. I had been focusing on text mining and natural language processing, which can be seen as learning implicit knowledge from data and using that knowledge to derive target information from text, and I had not worked with or thought much about formal or explicit knowledge (e.g., data represented in RDF or OWL). I now realize that my research can benefit from both, and this project is a starting point.

- I have always been interested in research on relationships, including their representation and extraction. In this project, I am starting to work on the relationships involved in the context of an event. Building on this work, I may look deeper into this area in the future.
