Doozer

Doozer is an on-demand domain model creation application that is available as a Web Service[1]. It uses the power of user-generated content on Wikipedia by harnessing the rich category hierarchy, link graph and concept descriptors.

Introduction

It is widely agreed that a formal representation of domain knowledge can benefit classification, knowledge retrieval and reasoning about domain concepts. Many envisioned applications of AI and the Semantic Web assume vast knowledge repositories of this sort, claiming that once they are available, machines will be able to plan and solve problems for us in ways previously unimaginable \citep{bernerslee2001semweb}.

This vision has some flaws. The massive repositories of formalized knowledge are either not available or do not interoperate well. One reason is that rigorous ontology design requires the designer to fully comply with the underlying logical model, e.g. description logics in the case of OWL-DL. It is very difficult to keep a single ontology logically consistent while maintaining high expressiveness and high connectivity of the domain model, let alone several ontologies designed by different groups.

Another problem is that ontologies, almost by definition, are static, monolithic blocks of knowledge that are not supposed to change frequently. The field of ontology was concerned with the essence and categorization of things, not with the things themselves. The further we venture into the abstract, the less change we encounter. The notion of what furniture is, for example, has not changed significantly over the past centuries, even though most individual items of furniture look quite different than they did even ten years ago. Our conceptualization of the world and of domains stays relatively stable while the actual things change rapidly.

In 2005, about 1,660,000 patent applications were filed worldwide, and 3-4Gb of professionally published content are produced daily \citep{ramakrishnan2007peopleweb}. Keeping up with what is new has become an impossible task. Still, more than ever before, we need to keep up with the news that is of interest and importance to us and update our worldview accordingly. One inspiration for this work was N. N. Taleb's bestseller The Black Swan \citep{taleb2007swan}, a book about the impossibility of predicting the future and the necessity of being prepared for it. The best way to achieve this is to have the best, latest and most appropriate information available at the right time. "It's impossible to predict who will change the world, because major changes are Black Swans, the result of accidents and luck. But we do know who society's winners will be: those who are prepared to face Black Swans, to be exposed to them, to recognize them when they show up and to rigorously exploit them." (N. N. Taleb)

We want to be the first to know about change: ideally before it happens, or at least shortly after. The Black Swan paradigm for information retrieval is thus \textbf{``What will you want to know tomorrow?''} The news we want to see needs to give us more information than we already have, not reiterate old facts. News delivery also needs to take changes in domains into account, ideally without the user's interference, because the user relies on the news delivery system itself to be informed about new developments. Classification based on corpus learning or user input can only react to changes in the domain; it can never be ahead of them. There are several ways to stay up to date with current information, e.g. following storylines by capturing the direction that previous news items were taking and updating a training corpus accordingly, or constantly updating a domain model. Our work aims at the latter, assuming that social knowledge aggregation sites such as Wikipedia contain descriptions of new concepts very quickly.

Doozer is part of a pipeline for news classification that aims at identifying content that could be of immediate interest for different domains. A domain is described by an automatically generated domain model. The underlying knowledge repository from which the model is extracted is Wikipedia, likely the fastest growing and most up-to-date encyclopedia available. Its category structure resembles the class hierarchy of a formal ontology to some extent, even though many subcategory relationships in Wikipedia are associative rather than strict is_a relationships. Likewise, not all categorizations of articles are strict type relationships, and not all articles represent instances. For this reason we refrain from calling our resulting domain model an ontology. This work does not aim at solving the problem of creating formal ontologies. Whereas ontologies that are used for reasoning, database integration, etc. need to be logically consistent, well restricted and highly connected to be of any use at all, domain models for information retrieval can be more loosely connected and even allow for logical inconsistencies.

Model Creation

Doozer is available in a simplified version as a Web Service that only requires a domain description in the form of a keyword query as input. The query follows the Lucene query syntax and is disjunctive by default. The web interface is available here.
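As an illustration of what such a domain description might look like, the following minimal sketch assembles a disjunctive keyword query in Lucene syntax. The function name `build_disjunctive_query` and the example keywords are hypothetical and not part of Doozer itself; the sketch only builds the query string and makes no assumption about how the Web Service is called. Single-word terms are passed through unchanged, so any Lucene special characters in them would still need escaping.

```python
def build_disjunctive_query(keywords):
    """Join keywords into a Lucene-style disjunctive query string.

    Hypothetical helper for illustration only: multi-word keywords become
    quoted phrases, and all terms are joined with an explicit OR.
    """
    terms = []
    for keyword in keywords:
        keyword = keyword.strip()
        if not keyword:
            continue  # skip empty entries
        if " " in keyword:
            # Escape backslashes and embedded double quotes, then quote the phrase.
            escaped = keyword.replace("\\", "\\\\").replace('"', '\\"')
            terms.append(f'"{escaped}"')
        else:
            terms.append(keyword)
    return " OR ".join(terms)


query = build_disjunctive_query(["Arab Spring", "Egypt", "protest"])
print(query)  # "Arab Spring" OR Egypt OR protest
```

Because the query is disjunctive by default, writing the terms separated only by spaces should be equivalent; the explicit `OR` is used here just to make the intent visible.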

An introductory video can be found here.

Example Applications

Example Models

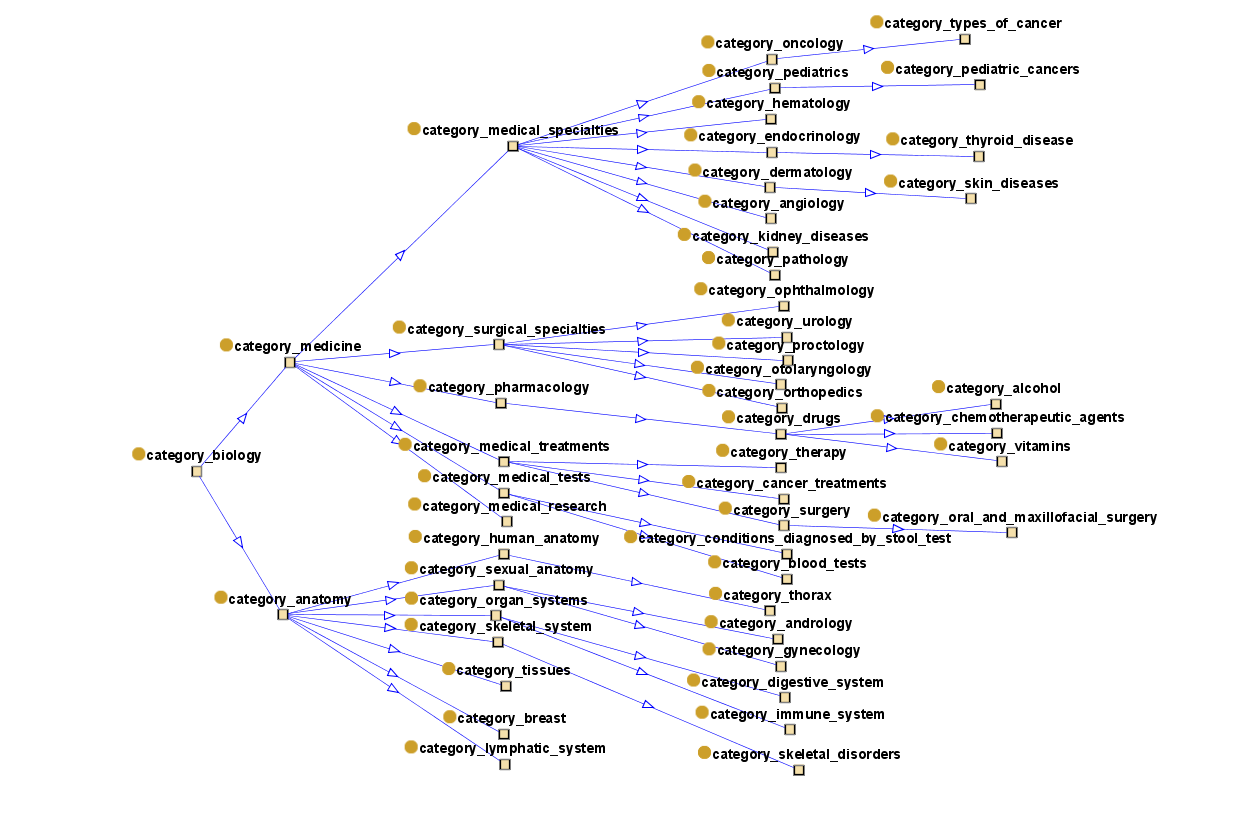

In this section we show some models that were automatically extracted using Doozer. These range from models inspired by Information Retrieval and filtering tasks to models that describe scientific domains. The IR-inspired models revolve around filtering of ongoing events, such as the Arab Spring in early 2011, specifically the protests in Egypt. The scientific models were built for the domains of Neoplasms and of Cognitive Performance.

The following images show models that were created on the fly for Information Retrieval purposes.