Computational Analogy Making

(→Publications) |

(→Publications) |

||

| Line 51: | Line 51: | ||

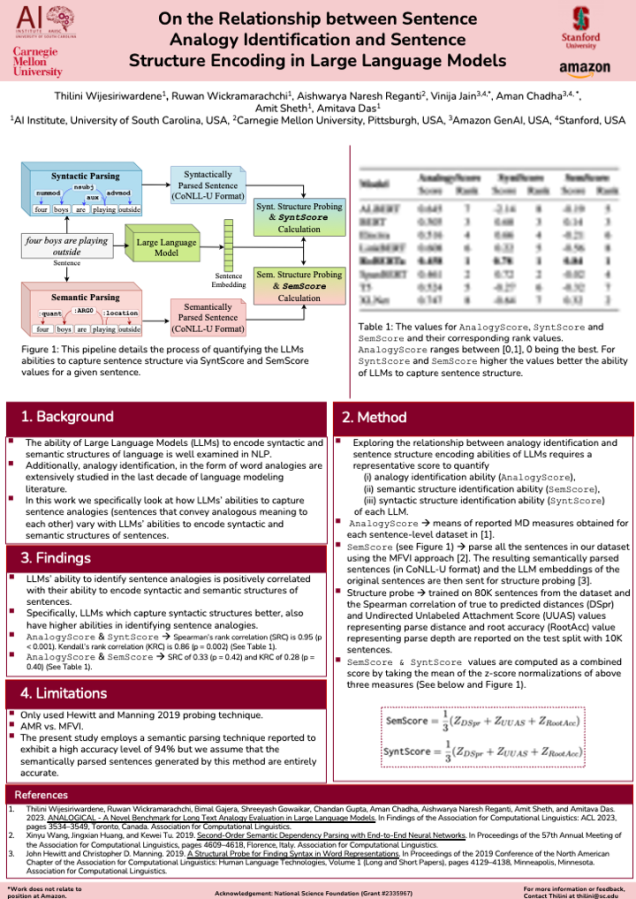

The ability of Large Language Models (LLMs) to encode syntactic and semantic structures of language is well examined in NLP. Additionally, analogy identification, in the form of word analogies are extensively studied in the last decade of language modeling literature. In this work we specifically look at how LLMs’ abilities to capture sentence analogies (sentences that convey analogous meaning to each other) vary with LLMs’ abilities to encode syntactic and semantic structures of sentences. Through our analysis, we find that LLMs’ ability to identify sentence analogies is positively correlated with their ability to encode syntactic and semantic structures of sentences. Specifically, we find that the LLMs which capture syntactic structures better, also have higher abilities in identifying sentence analogies. | The ability of Large Language Models (LLMs) to encode syntactic and semantic structures of language is well examined in NLP. Additionally, analogy identification, in the form of word analogies are extensively studied in the last decade of language modeling literature. In this work we specifically look at how LLMs’ abilities to capture sentence analogies (sentences that convey analogous meaning to each other) vary with LLMs’ abilities to encode syntactic and semantic structures of sentences. Through our analysis, we find that LLMs’ ability to identify sentence analogies is positively correlated with their ability to encode syntactic and semantic structures of sentences. Specifically, we find that the LLMs which capture syntactic structures better, also have higher abilities in identifying sentence analogies. | ||

| − | [[File:Thilini_poster_EACL. | + | [[File:Thilini_poster_EACL.png|900x900px]] |

== Talks == | == Talks == | ||

Revision as of 11:20, 27 March 2024

Background and motivation

A human’s ability to recognize objects or situations in one context as similar to objects or situations in another context is known as analogy making. When making analogies, we largely follow three steps:

- Building representations: these can be hierarchical graph structures of the entities in each domain and the relationships between them.

- Mapping: corresponding elements in the two structures are identified. Mapping usually goes from a source (base) domain, which is familiar, to a target domain, which is new and unfamiliar.

- Inference: once the mapping is complete, knowledge from the source domain is transferred to the target domain. Some entities and relationships present in the source domain may be missing from the target domain; identifying and completing them is called inference.
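The three steps above can be illustrated with the classic solar-system/atom analogy. The sketch below is a deliberately simplified toy. It is not Structure Mapping Theory or any published system. It works under three assumptions:

- Each domain is a set of (relation, subject, object) triples.
- Entities are mapped when they fill the same role in identically named relations.
- Any source relation with no counterpart in the target is inferred.

The domain contents and the function names `map_entities` and `infer` are hypothetical.

```python
# Toy illustration (assumption): domains are sets of (relation, subject, object)
# triples. This is a simplification, not Structure Mapping Theory itself.
Triple = tuple[str, str, str]

# Step 1: build representations (hypothetical toy domains).
SOURCE: set[Triple] = {
    ("attracts", "sun", "planet"),
    ("revolves_around", "planet", "sun"),
    ("more_massive_than", "sun", "planet"),
}
TARGET: set[Triple] = {
    ("attracts", "nucleus", "electron"),
    ("more_massive_than", "nucleus", "electron"),
}


def map_entities(source: set[Triple], target: set[Triple]) -> dict[str, str]:
    """Step 2: align entities that fill the same role in a shared relation.

    Naive rule (assumption): relations must have identical names, and the
    first alignment found for an entity wins.
    """
    mapping: dict[str, str] = {}
    for rel_s, subj_s, obj_s in sorted(source):
        for rel_t, subj_t, obj_t in sorted(target):
            if rel_s == rel_t:
                mapping.setdefault(subj_s, subj_t)
                mapping.setdefault(obj_s, obj_t)
    return mapping


def infer(source: set[Triple], target: set[Triple],
          mapping: dict[str, str]) -> set[Triple]:
    """Step 3: carry over source relations that are missing in the target."""
    inferred: set[Triple] = set()
    for rel, subj, obj in source:
        if subj in mapping and obj in mapping:
            candidate = (rel, mapping[subj], mapping[obj])
            if candidate not in target:
                inferred.add(candidate)
    return inferred
```

Running `infer(SOURCE, TARGET, map_entities(SOURCE, TARGET))` returns `{('revolves_around', 'electron', 'nucleus')}`: the electron is inferred to revolve around the nucleus. Matching on identical relation names is the simplest possible choice. SMT-based approaches additionally enforce structural consistency and prefer interconnected systems of higher-order relations.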

There have been several attempts to formalize the process of human analogy making and to represent it computationally through symbolic, connectionist, and hybrid approaches. Gentner’s Structure Mapping Theory (SMT) [1] is central to the field.

People

- Artificial Intelligence Institute, University of South Carolina

- External Collaborators

Publications

1. Towards efficient scoring of student-generated long-form analogies in STEM [2]

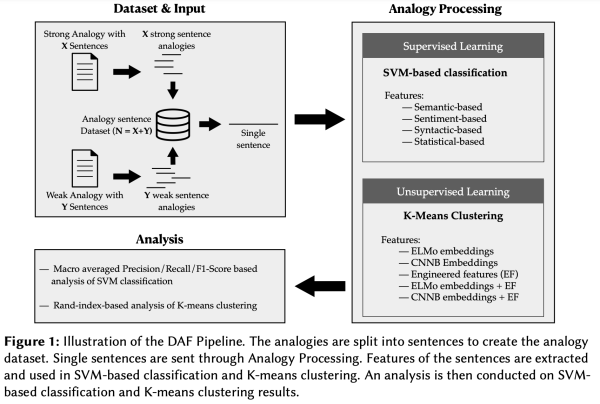

Switching from an analogy pedagogy based on comprehension to one based on production creates a scoring problem: grading the produced analogies manually is impractical. Conventional symbol-matching approaches to computational analogy evaluation focus on positive cases and strain computational feasibility. This work presents the Discriminative Analogy Features (DAF) pipeline to identify the discriminative features of strong and weak long-form text analogies. We introduce four feature categories (semantic, syntactic, sentiment, and statistical) used with supervised vector-based learning methods to discriminate between strong and weak analogies. Using a modestly sized vector of engineered features with an SVM attains a macro F1 score of 0.67. While a semantic feature is the most discriminative, most of the top 15 discriminative features are syntactic. Combining these engineered features with an ELMo-generated embedding still improves classification relative to the embedding alone. While an unsupervised K-Means clustering-based approach falls short, similar hints of improvement appear when its inputs include the engineered features used in supervised learning.
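Macro F1, the metric reported above, averages the F1 score of each class with equal weight. The weak-analogy class therefore counts as much as the strong one, even if it is rarer. A minimal pure-Python sketch follows. The function name `macro_f1` and the example labels are made up for illustration and are not data from the paper.

```python
def macro_f1(y_true: list[str], y_pred: list[str]) -> float:
    """Unweighted mean of per-class F1 scores (e.g. "strong" and "weak")."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    f1_scores = []
    for label in labels:
        # Count true positives, false positives and false negatives per class.
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        f1_scores.append(f1)
    return sum(f1_scores) / len(f1_scores)
```

Consider hypothetical labels `y_true = ["strong", "strong", "weak", "weak"]` and `y_pred = ["strong", "weak", "weak", "weak"]`. The per-class F1 scores are about 0.667 (strong) and 0.8 (weak), giving a macro F1 of about 0.733. scikit-learn's `f1_score(..., average="macro")` computes the same unweighted average.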

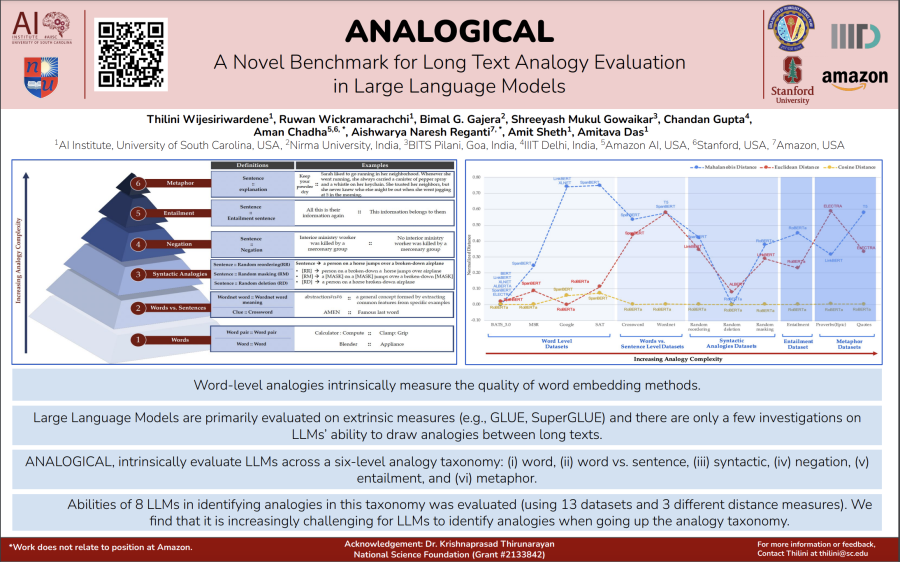

2. ANALOGICAL - A Novel Benchmark for Long Text Analogy Evaluation in Large Language Models [3]

Over the past decade, analogies, in the form of word-level analogies, have played a significant role as an intrinsic measure of the quality of word embedding methods such as word2vec. Modern large language models (LLMs), however, are primarily evaluated with extrinsic measures based on benchmarks such as GLUE and SuperGLUE, and few studies have investigated whether LLMs can draw analogies between long texts. In this paper, we present ANALOGICAL, a new benchmark to intrinsically evaluate LLMs across a taxonomy of long-text analogies with six levels of complexity: (i) word, (ii) word vs. sentence, (iii) syntactic, (iv) negation, (v) entailment, and (vi) metaphor. Using thirteen datasets and three different distance measures, we evaluate the ability of eight LLMs to identify analogical pairs in the semantic vector space. Our evaluation finds that identifying analogies becomes increasingly challenging for LLMs the higher they go in the analogy taxonomy.
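ANALOGICAL checks whether an LLM places analogous texts close together in its embedding space. The abstract does not name its three distance measures, so the sketch below assumes two common ones, cosine and Euclidean distance. It uses hypothetical 3-dimensional vectors in place of real LLM embeddings, and the helper `closer_is_analogous` is also hypothetical.

```python
import math

Vector = list[float]


def cosine_distance(u: Vector, v: Vector) -> float:
    """1 minus cosine similarity; 0.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def euclidean_distance(u: Vector, v: Vector) -> float:
    """Straight-line distance between two points (Python 3.8+)."""
    return math.dist(u, v)


def closer_is_analogous(anchor: Vector, analog: Vector, distractor: Vector,
                        distance) -> bool:
    """Hypothetical check: is the analogous text nearer than an unrelated one?"""
    return distance(anchor, analog) < distance(anchor, distractor)


# Hypothetical toy "embeddings" (assumption): not produced by any real model.
anchor = [1.0, 0.2, 0.0]
analog = [0.9, 0.3, 0.1]
distractor = [0.0, 0.1, 1.0]
```

With these toy vectors, both `closer_is_analogous(anchor, analog, distractor, cosine_distance)` and the Euclidean variant return `True`. Cosine distance ignores vector length, while Euclidean distance does not, which is one reason a benchmark might report several measures side by side.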

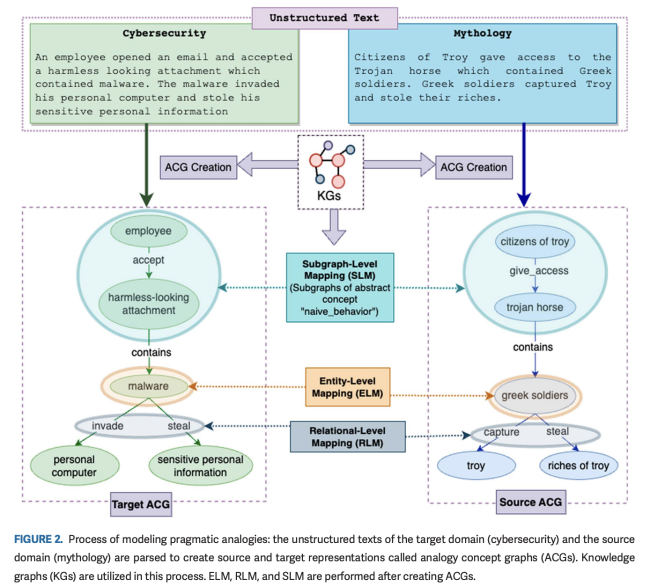

3. Why Do We Need Neuro-symbolic AI to Model Pragmatic Analogies? (extended version; an abridged version was published in IEEE Intelligent Systems)

A hallmark of intelligence is the ability to use a familiar domain to make inferences about a less familiar domain, known as analogical reasoning. In this article, we examine the performance of Large Language Models (LLMs) on progressively complex analogies expressed in unstructured text. We discuss analogies at four distinct levels of complexity: lexical, syntactic, semantic, and pragmatic. As analogies become more complex, they require increasingly extensive and diverse knowledge beyond the textual content, knowledge unlikely to be captured by the lexical co-occurrence statistics that power LLMs. To address this, we argue for Neuro-symbolic AI techniques that combine statistical and symbolic AI. These techniques inform the representation of unstructured text so as to highlight and augment relevant content, provide abstraction, and guide the mapping process. Our knowledge-informed approach maintains the efficiency of LLMs while preserving the ability to explain analogies for pedagogical applications.

4. On the Relationship between Sentence Analogy Identification and Sentence Structure Encoding in Large Language Models [4]

The ability of Large Language Models (LLMs) to encode the syntactic and semantic structures of language is well examined in NLP. Analogy identification, in the form of word analogies, has also been studied extensively in the language modeling literature of the last decade. In this work, we specifically examine how LLMs’ ability to capture sentence analogies (sentences that convey analogous meanings) varies with their ability to encode the syntactic and semantic structures of sentences. Our analysis finds that LLMs’ ability to identify sentence analogies is positively correlated with their ability to encode the syntactic and semantic structures of sentences. In particular, LLMs that capture syntactic structures better are also better at identifying sentence analogies.
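One way to read the finding above is as a correlation across models. Each LLM gets one score for encoding sentence structure and one for identifying sentence analogies, and the two score lists are correlated. The abstract does not say which statistic was used, so the sketch below assumes Pearson's r. The function name is illustrative, and any scores passed to it here are placeholders, not results from the paper.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equal-length lists of per-model scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances (the common 1/n factors cancel out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)
```

A positive value (up to 1.0) means models that score higher on structure encoding also tend to score higher on analogy identification. On Python 3.10+, `statistics.correlation` computes the same Pearson coefficient.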

Talks

1. Prof. Amit Sheth presented three of #AIISC's several AI for Education projects at "Integrating AI into Higher Education: A Faculty Panel Discussion", part of the event "Exploring the Intersection of AI Teaching" organized by the Center for Teaching Excellence [5].

2. A session on learning through analogy that we conducted at the high school summer camp.